Jan 15, 2026

Why the US Ranks 24th in AI Adoption (Despite Leading in AI Development)

The United States is home to OpenAI, Anthropic, Google DeepMind, and Meta AI. It produces more AI research papers than any other country. Its companies lead global funding rounds, and its models power much of the world's enterprise AI software.

And yet, according to the Microsoft AI Economy Institute's latest Global AI Diffusion Report, the US ranks just 24th in AI adoption, down from 23rd six months ago.

This is not a data error. It is a signal that building AI and using AI are fundamentally different problems. The US has solved the first. It is struggling with the second.

For enterprise leaders, this gap should feel familiar. You have access to GPT-4, Claude, Gemini. Your teams have experimented with automation pilots. Your vendors promise AI-powered everything. Yet when you audit what is actually running in production, the answer is often: not much.

The countries leading global AI adoption did not get there by building better models. They got there by making AI actually work in daily operations. And that distinction has everything to do with how your organization should think about AI implementation.

What the Data Actually Shows

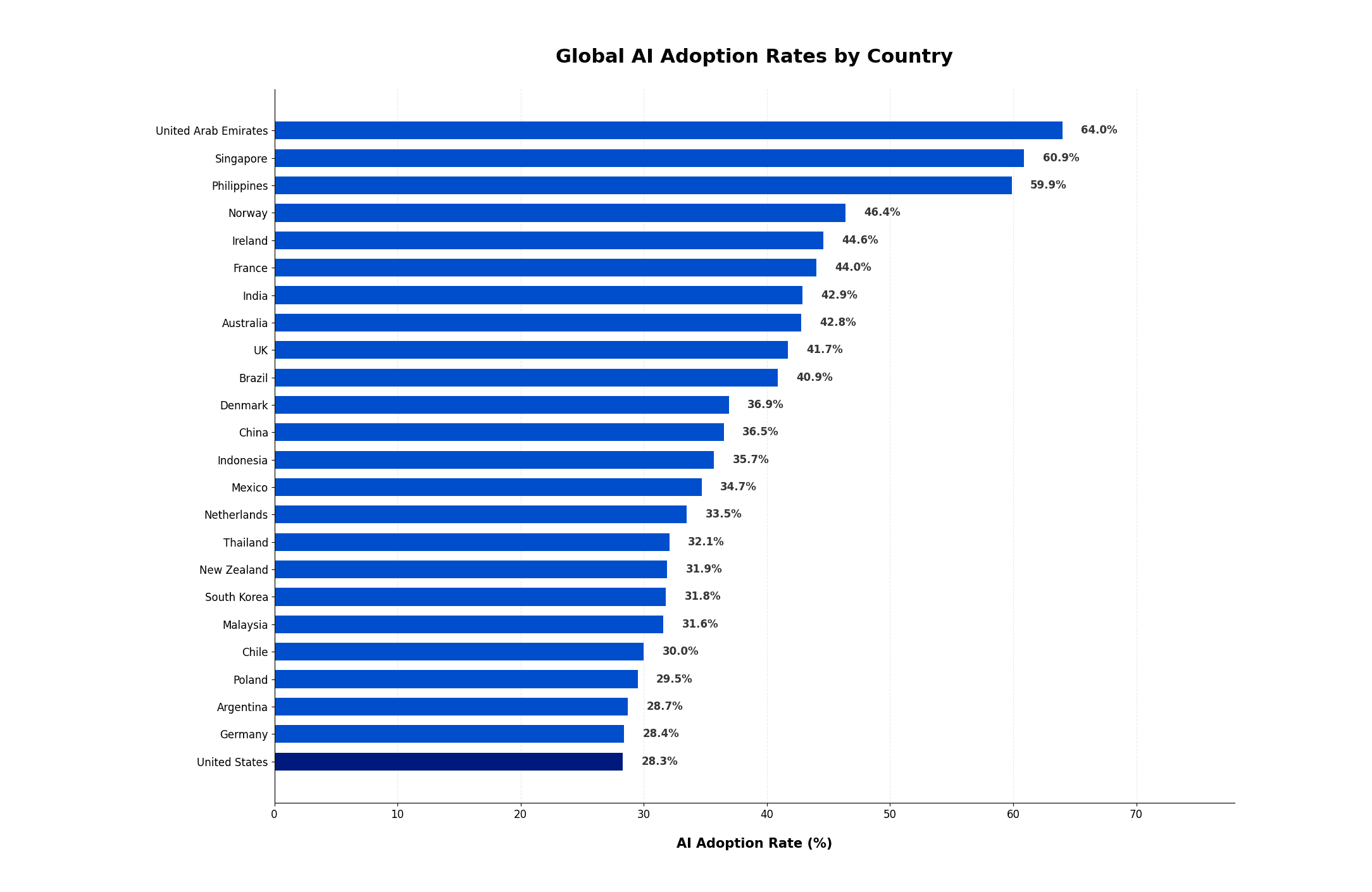

The Microsoft report surveyed over 37,000 people across 37 countries, measuring AI usage across work, personal tasks, and creative applications. Here is what they found:

Source: Microsoft AI Economy Institute, Global AI Diffusion Report 2025

The global adoption rate rose from 15.1% to 16.3% in six months: meaningful progress, but not the acceleration many predicted.

The UAE leads the world at 64% adoption, followed by Singapore (60.9%) and the Philippines (59.9%). These are not the countries producing the models. They are the countries deploying them.

The US sits at 28.3%, above the global north average of 24.7% but less than half the UAE's rate. This is not because Americans lack access to AI tools; they arguably have more access than anyone. It is because access and adoption are not the same thing.

The gap between the global north and global south widened from 9.8 to 10.6 percentage points. Even so, some countries with less AI infrastructure are adopting faster than countries with more.

The report's most striking finding: South Korea jumped seven spots in six months, moving from 25th to 18th with a 4.8 percentage point increase, the largest gain of any country surveyed. That kind of movement does not come from incremental improvement. It comes from systematic deployment.
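As a back-of-envelope check on the figures in this section, the short script below recomputes the headline numbers using only the percentages quoted from the report. It separates percentage-point changes from relative growth, since the two are easy to conflate. The "implied global south" value assumes the north-south gap is simply the global-north average minus the global-south average; that is an assumption, not a figure stated in the text.

```python
# Back-of-envelope arithmetic on the adoption figures quoted in this article.
# All inputs are percentages of the population using AI, as quoted above.
GLOBAL_BEFORE = 15.1
GLOBAL_AFTER = 16.3
GLOBAL_NORTH = 24.7
NORTH_SOUTH_GAP_BEFORE = 9.8
NORTH_SOUTH_GAP_AFTER = 10.6
US_RATE = 28.3
UAE_RATE = 64.0


def pp_change(before: float, after: float) -> float:
    """Absolute change in percentage points, rounded to one decimal."""
    return round(after - before, 1)


def relative_change_pct(before: float, after: float) -> float:
    """Relative change in percent (not percentage points), rounded to one decimal."""
    return round((after - before) / before * 100, 1)


def summarize() -> dict:
    """Derive the headline comparisons from the quoted inputs."""
    return {
        "global_pp_gain": pp_change(GLOBAL_BEFORE, GLOBAL_AFTER),
        "global_relative_gain_pct": relative_change_pct(GLOBAL_BEFORE, GLOBAL_AFTER),
        # Assumption: gap = global-north average minus global-south average.
        "implied_global_south": round(GLOBAL_NORTH - NORTH_SOUTH_GAP_AFTER, 1),
        "gap_widening_pp": pp_change(NORTH_SOUTH_GAP_BEFORE, NORTH_SOUTH_GAP_AFTER),
        "us_minus_global_north_pp": round(US_RATE - GLOBAL_NORTH, 1),
        "uae_to_us_ratio": round(UAE_RATE / US_RATE, 2),
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

The 1.2-point global gain works out to roughly 7.9% relative growth over six months. The US figure sits 3.6 points above the global-north average, while the UAE's rate is about 2.26 times the US rate.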

Why the US Is Falling Behind (And What It Reveals About Enterprise AI)

The US paradox (leading in AI development while lagging in AI usage) has three root causes that directly mirror enterprise challenges.

1. Trust Is the Bottleneck, Not Technology

The Edelman Trust Barometer found that just 32% of Americans trust AI, compared to 67% in the UAE. This is not about capability. It is about confidence.

In enterprises, this manifests as the pilot-to-production gap. Teams run experiments, executives get demos, but when it comes to letting AI handle real workflows with real consequences, organizations hesitate. The question is not "can AI do this?" but "do we trust AI to do this?"

Countries with high adoption rates (the UAE, Singapore, South Korea) have invested heavily in building institutional confidence through clear governance frameworks, public sector leadership, and visible success stories. They made trust a deployment strategy, not an afterthought.

2. Deployment Infrastructure Matters More Than Model Access

Every enterprise in the US can access GPT-4 today. So can enterprises in the UAE. The difference is what happens next.

High-adoption countries have built deployment ecosystems: standardized integration patterns, trained implementation teams, documented best practices, and feedback loops that turn initial rollouts into scaled operations.

In contrast, many US enterprises treat each AI project as a bespoke experiment. They rebuild the same infrastructure, retrain the same teams, and relearn the same lessons with every new use case. This helps explain forecasts such as Gartner's prediction that over 40% of agentic AI projects will be canceled by the end of 2027. The likely culprit is less the technology than the deployment approach.

3. The Demo-to-Production Gap Is Real

The AI adoption report captures something enterprises experience daily: the gap between what AI can do in a demo and what AI does in production.

Microsoft's data shows that global AI adoption rose only 1.2 percentage points in six months, far below what technology availability would predict. If access were the binding constraint, the wide availability of tools would already be driving faster growth. The real constraint is operationalization: getting AI to work reliably, at scale, in complex environments.

This is the same dynamic behind the statistic everyone quotes but few internalize: an estimated 87% of AI projects never make it to production. Not because the models are not good enough, but because the deployment is not good enough.

What High-Adoption Countries Do Differently

The UAE's position at the top of the adoption rankings is not accidental. It reflects a decade of strategic investment in making AI operational, not just available.

The UAE's approach to AI-powered government services prioritized deployment from the start. When they launched AI initiatives, they did not just provide tools. They built the institutional infrastructure to use them: training programs, governance frameworks, integration standards, and accountability structures.

Singapore followed a similar playbook. Their Smart Nation initiative did not focus on building models. It focused on building deployment capacity across government and industry, creating standardized patterns that any organization could adopt.

South Korea's dramatic rise in the rankings reflects the same strategy at accelerated pace. Their government-led AI adoption push combined regulatory clarity with deployment support, giving enterprises a clear path from experimentation to production.

The lesson is not that government intervention is the answer. It is that deployment requires infrastructure, and infrastructure requires investment, whether from government, industry associations, or individual enterprises.

The DeepSeek Effect: Why Open-Source Is Reshaping Adoption Patterns

One finding in the Microsoft report deserves special attention: DeepSeek, the Chinese open-source model, shows 2-4x higher usage rates in Africa compared to other regions.

This is significant because it suggests that adoption patterns are shifting in ways that established AI leaders may not anticipate. When deployment barriers are high (cost, integration complexity, vendor lock-in), open-source alternatives gain traction, not because they are better, but because they are more deployable.

For enterprises, this is a reminder that the winning AI strategy is not always the most advanced AI strategy. It is the one that actually gets deployed.

What This Means for Enterprise AI Strategy

The US ranking should serve as a warning for enterprise leaders who assume that access equals adoption.

The question is not "do we have AI tools?" Most enterprises have access to more AI capabilities than they can use. The question is "what is our deployment infrastructure?"

Here is what high-adoption organizations, whether countries or companies, do differently:

They invest in deployment, not just experimentation. Every pilot includes a production path. Every proof-of-concept includes an operationalization plan. The goal is not to prove AI works; it is to make AI work.

They standardize integration patterns. Rather than treating each use case as unique, they build reusable infrastructure that makes the next deployment faster than the last. This is how enterprises actually scale AI.

They build trust systematically. This means governance frameworks, clear accountability, transparent performance metrics, and gradual expansion from low-stakes to high-stakes applications.

They focus on AI agents that complete workflows, not just individual tasks. The gap between 16% global adoption and 64% UAE adoption is not explained by tool access. It is explained by how deeply AI is integrated into actual work processes.

The Production Gap Is the Opportunity

The US ranking reveals an uncomfortable truth: being good at building AI does not make you good at using AI. These are different competencies, and most organizations have invested heavily in the first while underinvesting in the second.

For enterprise leaders, this is actually good news. The competitive advantage in AI is not about model access; everyone has that. It is about deployment capacity. And deployment capacity can be built.

The countries winning at AI adoption are not the ones with the most advanced models. They are the ones who made AI actually work in daily operations.

Your organization faces the same choice. You can continue experimenting with AI, running pilots that impress executives but never reach production. Or you can invest in the deployment infrastructure that turns AI capability into AI results.

The technology is no longer the bottleneck. The gap between what AI can do and what AI does do is closing, but only for organizations that prioritize making AI work, not just making AI available.