Have you ever wondered why no single AI model can handle every task perfectly? 🤔

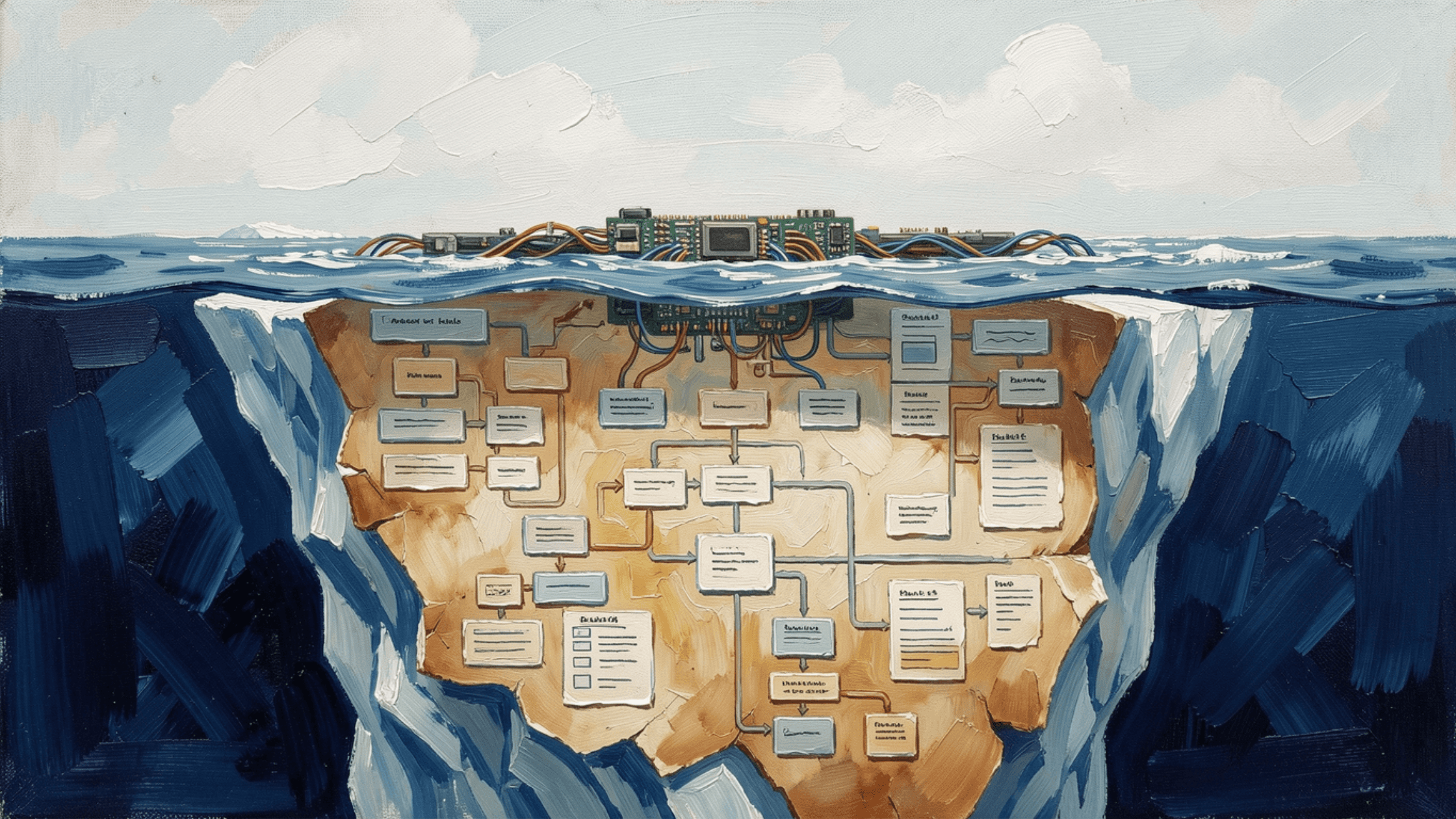

At Beam AI, we continuously improve our AI agents by combining the strengths of multiple large language models (LLMs) through an approach we call ModelMesh. ModelMesh lets us combine models dynamically for different use cases, so our agents stay both reliable and cost-effective.

The Need for a Multi-Model Strategy

In practice, no single model excels at every task, so we use a multi-model strategy that balances precision, performance, and cost. ModelMesh lets our AI agents choose the most effective model, or combination of models, for the requirements of each task.

Key Insights: Dynamic Model Selection

ModelMesh integrates a range of LLMs and private models and switches between them task by task, rather than relying on a one-size-fits-all model. For example:

GPT-4o: Best for complex reasoning and decision-making, such as tasks that require deep understanding and analysis.

Claude 3.5: Reliable for data processing and extraction, where information must be gathered and presented accurately.

Command R: Optimized for retrieval-augmented generation (RAG) workflows, where relevant data must be retrieved quickly.

GPT-4o-mini: Ideal for low-latency tasks where speed is critical, delivering fast responses without a significant loss in accuracy.

Private Models (e.g., Mistral): Offer flexibility, privacy, and control for specialized applications with organization-specific requirements.
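To make the role assignments above concrete, here is a minimal Python sketch of static, rule-based routing. It assumes each incoming task has already been labeled with a type. `TaskType`, `ROUTING_TABLE`, `select_model`, and the model identifier strings are hypothetical illustrations, not ModelMesh's actual API or exact provider model IDs.

```python
from enum import Enum


class TaskType(Enum):
    """Hypothetical task categories, one per model role described above."""
    REASONING = "reasoning"
    EXTRACTION = "extraction"
    RETRIEVAL = "retrieval"
    LOW_LATENCY = "low_latency"
    PRIVATE = "private"


# Illustrative mapping from task type to model identifier.
# The identifier strings are placeholders, not exact provider model IDs.
ROUTING_TABLE: dict[TaskType, str] = {
    TaskType.REASONING: "gpt-4o",
    TaskType.EXTRACTION: "claude-3.5",
    TaskType.RETRIEVAL: "command-r",
    TaskType.LOW_LATENCY: "gpt-4o-mini",
    TaskType.PRIVATE: "mistral-private",
}


def select_model(task: TaskType) -> str:
    """Return the model configured for a given task type."""
    return ROUTING_TABLE[task]


print(select_model(TaskType.RETRIEVAL))  # command-r
```

A lookup table like this is the simplest form of routing. A dynamic system could replace the table with a classifier or with rules that also weigh cost and latency.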

Performance vs. Cost Trade-Offs

Combining models dynamically keeps us from spending heavyweight compute on lightweight tasks. When a task allows it, our agents default to lighter, faster models, which reduces latency and cost while maintaining quality.
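A common way to implement "default to the lighter model" is a model cascade. The system tries the cheapest model first and escalates to a stronger one only when the output fails a check. The sketch below shows that generic technique and is not necessarily how ModelMesh makes the decision. The stub models and the acceptance check (`bool`, meaning "non-empty") are hypothetical stand-ins for real API calls and real quality checks.

```python
from typing import Callable, Sequence, Tuple

# A "model" here is any function that maps a prompt to a response.
Model = Callable[[str], str]


def cascade(
    prompt: str,
    tiers: Sequence[Tuple[str, Model]],
    is_acceptable: Callable[[str], bool],
) -> Tuple[str, str]:
    """Try models from lightest to heaviest; return the first acceptable answer.

    If no answer passes the check, the heaviest model's answer is returned
    as a fallback.
    """
    if not tiers:
        raise ValueError("at least one model tier is required")
    name, output = "", ""
    for name, model in tiers:
        output = model(prompt)
        if is_acceptable(output):
            break
    return name, output


# Stub models standing in for real API calls (hypothetical behavior).
def light_model(prompt: str) -> str:
    return "" if "complex" in prompt else f"quick answer: {prompt}"


def heavy_model(prompt: str) -> str:
    return f"detailed answer: {prompt}"


tiers = [("gpt-4o-mini", light_model), ("gpt-4o", heavy_model)]
print(cascade("simple lookup", tiers, is_acceptable=bool))
# ('gpt-4o-mini', 'quick answer: simple lookup')
print(cascade("complex analysis", tiers, is_acceptable=bool))
# ('gpt-4o', 'detailed answer: complex analysis')
```

The trade-off is that escalated requests pay for two calls. A cascade therefore pays off when most tasks can be handled by the light model.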

Scalable and Modular Design

ModelMesh is modular and scalable, with plug-and-play support for new models. This lets us adopt new developments in the LLM landscape quickly, without being locked into a single technology stack.

Bring Your Own Model (BYOM)

Organizations can plug their own proprietary models into ModelMesh and build AI workflows tailored to their specific needs.
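Bring-your-own-model integration typically relies on an adapter, which wraps the organization's model so it exposes the same interface as every other model. The sketch below assumes that interface is a single `generate(prompt) -> str` method. `ChatModel`, `CustomModelAdapter`, `run`, and the keyword-based `in_house_classifier` are hypothetical placeholders, not ModelMesh's real integration API.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


class ChatModel(Protocol):
    """Common interface every model in the mesh is assumed to expose."""
    name: str

    def generate(self, prompt: str) -> str: ...


@dataclass
class CustomModelAdapter:
    """Wraps an organization's own inference function so it satisfies ChatModel."""
    name: str
    infer: Callable[[str], str]

    def generate(self, prompt: str) -> str:
        return self.infer(prompt)


def run(model: ChatModel, prompt: str) -> str:
    """Workflow code depends only on the interface, not on a specific provider."""
    return model.generate(prompt)


# Hypothetical in-house model: a trivial keyword classifier standing in
# for a proprietary model served inside the organization.
def in_house_classifier(prompt: str) -> str:
    return "invoice" if "total due" in prompt.lower() else "other"


custom = CustomModelAdapter(name="acme-doc-classifier", infer=in_house_classifier)
print(run(custom, "Total due: 120 EUR"))  # invoice
```

`Protocol` keeps the interface structural. Any object with a `name` and a matching `generate` method works, so proprietary models do not need to inherit from a shared base class.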

Real-World Applications

A typical ModelMesh workflow might include:

Command R handling the retrieval step of a RAG pipeline.

Claude 3.5 processing and cleaning data for subsequent steps.

GPT-4o managing complex reasoning tasks.

GPT-4o-mini ensuring rapid responses when speed is critical.
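Assuming the steps above run in sequence, with each model's output becoming the next model's input, the workflow can be sketched as a simple pipeline. The stage functions are stubs in place of real model calls, and `run_pipeline` and the `Stage` tuple layout are hypothetical. This sketch runs every stage unconditionally. In the workflow described above, the GPT-4o-mini step applies when speed is critical, so a real version would make that step conditional.

```python
from typing import Callable, List, Tuple

# (step name, model name, function that transforms the step's input)
Stage = Tuple[str, str, Callable[[str], str]]


def run_pipeline(query: str, stages: List[Stage]) -> Tuple[str, List[str]]:
    """Run stages in order, feeding each output into the next; return result and trace."""
    data = query
    trace: List[str] = []
    for step, model_name, fn in stages:
        data = fn(data)
        trace.append(f"{step}:{model_name}")
    return data, trace


# Stub stage functions standing in for real model calls (hypothetical behavior).
stages: List[Stage] = [
    ("retrieve", "command-r", lambda q: f"context[{q}]"),
    ("clean", "claude-3.5", lambda text: " ".join(text.split())),
    ("reason", "gpt-4o", lambda text: f"analysis of {text}"),
    ("respond", "gpt-4o-mini", lambda text: f"answer: {text}"),
]

result, trace = run_pipeline("refund   policy", stages)
print(result)  # answer: analysis of context[refund policy]
print(trace)   # ['retrieve:command-r', 'clean:claude-3.5', 'reason:gpt-4o', 'respond:gpt-4o-mini']
```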

By optimizing our agents for both precision and efficiency, we aim to expand what AI workflows can do. These are exciting times in AI engineering, and we look forward to learning from others tackling similar challenges with multi-model systems!