05.09.2025

Agentic AI in 2026: Why 90% of Implementations Fail (and How to Be in the 10%)

Every week, another company announces its "groundbreaking agentic AI rollout." By month three, most of them have quietly shelved it. Here is why, and how you can improve your odds.

The uncomfortable truth about agentic AI success rates

If you're reading this, you've probably seen the headlines: "AI agents will transform business," "Agentic AI is the future of automation," "Companies using AI agents see 40% productivity gains."

All of this is true. But here's what they don't tell you.

Over 80% of AI implementations fail within the first six months, and agentic AI projects face even bigger challenges. MIT research indicates that 95% of enterprise AI pilots fail to deliver the expected returns.

Not because the technology doesn't work. Not because the use cases aren't real. But because most companies approach agentic AI as if it were just another software implementation.

It isn't. RAND Corporation research confirms that AI projects fail twice as often as traditional IT projects, and more than 80% never reach meaningful production use.

This is not another think piece about the future of AI. It is a practical guide based on what we have learned from hundreds of real implementations: the failures and the rare successes.

What exactly is agentic AI?

Before we get into why most projects fail, let's clarify what we are actually talking about.

Agentic AI platforms can:

Set and pursue goals independently (not just follow scripts)

Make decisions in real time based on changing conditions

Learn and improve from every interaction

Coordinate seamlessly with other systems and people

Think of it as the difference between a calculator and a financial analyst. One executes commands; the other thinks, plans, and adapts.

The promise? AI agents that work like your best employees: they understand context, make smart decisions, and get better over time.

The reality? Most implementations produce expensive, unreliable software that falls apart the moment something unexpected happens.

The 5 reasons why 90% of agentic AI projects fail

1. They treat it like traditional automation

The mistake: Companies approach agentic AI the same way as RPA or workflow automation. Map the process, build a bot, deploy it, forget it.

Why it fails: Agentic systems need continuous training, clear boundaries, and ongoing refinement. They are not "set and forget" tools.

Companies often deploy agents without accounting for edge cases. When the systems encounter unexpected scenarios, they break down, which requires manual intervention and defeats the purpose of automation.

The fix: Treat agentic AI like onboarding a new employee, not like installing software. Budget for training, iteration, and continuous improvement.

2. No clear success criteria

The mistake: Starting with vague goals like "increase productivity" or "reduce costs."

Why it fails: Without specific, measurable outcomes, teams cannot tell whether the agent is actually doing useful work or just generating expensive, unnecessary work.

Many projects fail because teams focus on technical capabilities instead of measurable business outcomes, which makes it impossible to determine whether the investment paid off.

The fix: Define exact metrics before development begins. For example: "Reduce invoice processing time from 8 days to 2 days while maintaining 99.5% accuracy."
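A target phrased this way can be checked automatically after every evaluation run. The Python sketch below encodes the two thresholds from the example (at most 2 days of processing time, at least 99.5% accuracy). The `SuccessCriteria` and `MeasuredResult` classes and the `meets_criteria` function are hypothetical names chosen for illustration, not part of any particular platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    """Hypothetical target metrics, fixed before development starts."""
    max_processing_days: float  # upper bound on invoice processing time
    min_accuracy: float         # lower bound on accuracy as a fraction (0.995 = 99.5%)


@dataclass(frozen=True)
class MeasuredResult:
    """Metrics observed for the agent over one evaluation period."""
    processing_days: float
    accuracy: float


def meets_criteria(result: MeasuredResult, criteria: SuccessCriteria) -> bool:
    """Return True only if every target is met; missing any one counts as failure."""
    return (
        result.processing_days <= criteria.max_processing_days
        and result.accuracy >= criteria.min_accuracy
    )


# Targets from the example: 8 days down to 2 days, with 99.5% accuracy maintained.
INVOICE_TARGETS = SuccessCriteria(max_processing_days=2.0, min_accuracy=0.995)

if __name__ == "__main__":
    fast_but_sloppy = MeasuredResult(processing_days=1.5, accuracy=0.97)
    on_target = MeasuredResult(processing_days=1.8, accuracy=0.996)
    print(meets_criteria(fast_but_sloppy, INVOICE_TARGETS))  # False
    print(meets_criteria(on_target, INVOICE_TARGETS))        # True
```

Because both thresholds must hold, an agent that gets faster while its accuracy slips below 99.5% still fails the check, which is exactly the trade-off a vague goal like "increase productivity" hides.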

3. Ignoring the human factor

The mistake: Building agents that replace people without involving those people in the design process.

Why it fails: Employees either sabotage the system or abandon it when it doesn't match how the work is actually done.

Successful implementations such as Avi Medical's involved end users from the start, ensuring that the AI agents fit seamlessly into existing workflows and met users' actual needs.

The fix: Design agents as collaborators, not replacements. Involve end users in every design decision.

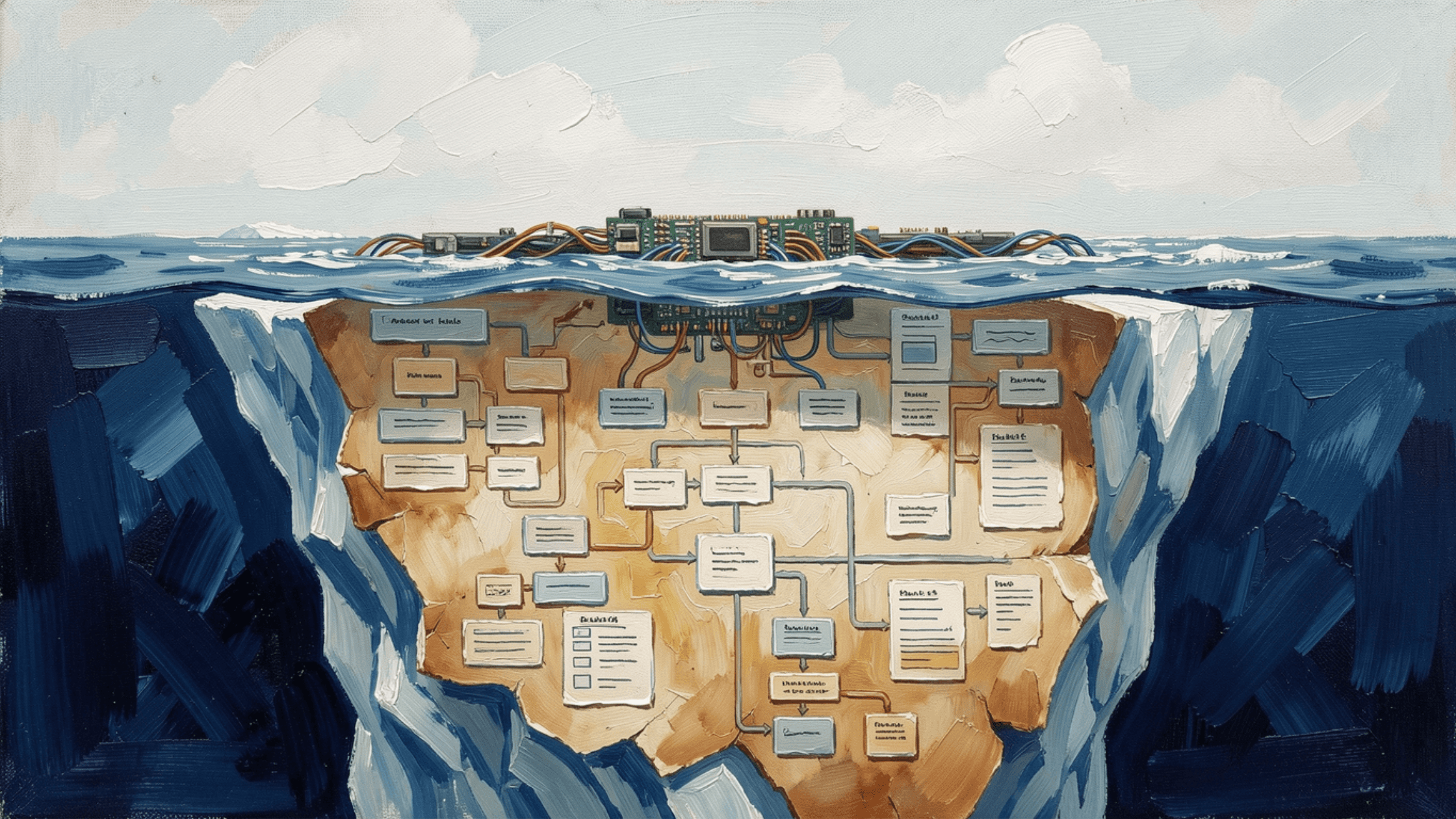

4. No production-ready architecture

The mistake: Building proofs of concept that work in controlled environments but cannot withstand the chaos of the real world.

Why it fails: Real business environments are messy. Data formats change, systems go down, and edge cases come up every day.

Many AI systems that perform well in controlled environments fail as soon as they are exposed to these conditions.

The fix: Design for failure from the start. Build agents that handle errors, system outages, and unexpected inputs gracefully.
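As a minimal sketch of what designing for failure can mean in code, the Python example below wraps a single agent task. Transient faults, such as a timeout in a downstream system, are retried with exponential backoff; input the agent does not recognize, or a fault that persists, is handed to a human instead of being guessed at. The exception classes, the `escalate` callback, and the retry defaults are assumptions chosen for illustration.

```python
import time
from typing import Callable


class TransientError(Exception):
    """A failure worth retrying, e.g. a downstream system timing out."""


class UnexpectedInputError(Exception):
    """Input the agent was not designed for; retrying will not help."""


def run_with_fallback(
    task: Callable[[], str],
    escalate: Callable[[str], str],
    max_attempts: int = 3,
    base_delay: float = 0.5,
) -> str:
    """Run a hypothetical agent task, retrying transient failures with
    exponential backoff and handing everything else to a human."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except UnexpectedInputError as exc:
            # Unknown territory: escalate immediately instead of guessing.
            return escalate(f"unexpected input: {exc}")
        except TransientError as exc:
            if attempt == max_attempts:
                return escalate(f"gave up after {attempt} attempts: {exc}")
            # Wait base_delay, 2 * base_delay, 4 * base_delay, ... between attempts.
            time.sleep(base_delay * 2 ** (attempt - 1))
    # Only reached when max_attempts < 1.
    return escalate("no attempts were made")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_lookup() -> str:
        # Simulated system that times out twice before answering.
        calls["n"] += 1
        if calls["n"] < 3:
            raise TransientError("ERP timeout")
        return "invoice processed"

    def to_human(reason: str) -> str:
        return f"escalated to human queue ({reason})"

    print(run_with_fallback(flaky_lookup, to_human, base_delay=0.0))  # invoice processed
```

Separating the two error types is the key design choice: retrying helps when a system is briefly unavailable, but retrying an input the agent was never built for only delays the escalation.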

5. Trying to boil the ocean

The mistake: Starting with complex, multi-step processes that touch dozens of systems.

Why it fails: Too many variables, too many potential points of failure, and too much complexity to debug when something goes wrong.

Projects that try to automate entire complex workflows from day one typically fail for exactly these reasons.

The fix: Start small, prove value, then expand. Automate one specific task extremely well before moving on to the next.

What success actually looks like: the 10% who get it right

The companies that succeed with agentic AI share five traits:

1. They start with process clarity

Before writing a single line of code, successful companies have crystal-clear documentation of their current processes. They know exactly what "good" looks like.

Example: Beam AI's work with Avi Medical started from clear documentation of the patient inquiry processes, which enabled the team to automate 81% of routine inquiries while maintaining high accuracy.

2. They design for oversight, not autonomy

Successful implementations do not give agents unlimited freedom. They create structured workflows with clear escalation paths and human checkpoints.

Example: In the Avi Medical implementation, agents handled routine patient inquiries automatically while flagging complex cases for human review, achieving both efficiency and quality control.
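One common way to implement such a checkpoint is a routing rule: the agent acts on its own only when a case falls into a known routine category and its confidence clears a threshold, and everything else goes to a person. The Python sketch below assumes the agent returns a category label plus a confidence score; the category names and the 0.85 threshold are illustrative assumptions, not details of the Avi Medical setup.

```python
from dataclasses import dataclass

# Hypothetical set of inquiry types the agent may answer on its own.
ROUTINE_CATEGORIES = {"appointment", "prescription_refill", "opening_hours"}
CONFIDENCE_THRESHOLD = 0.85  # assumed value; tune it against real escalation data


@dataclass
class Classification:
    category: str      # what the agent thinks the inquiry is about
    confidence: float  # the agent's confidence in that label, from 0.0 to 1.0


def route(c: Classification) -> str:
    """Human checkpoint: automate only routine, high-confidence cases."""
    if c.category in ROUTINE_CATEGORIES and c.confidence >= CONFIDENCE_THRESHOLD:
        return "auto"
    # Anything unfamiliar or uncertain falls through to a person.
    return "human_review"


if __name__ == "__main__":
    print(route(Classification("appointment", 0.93)))         # auto
    print(route(Classification("diagnosis_question", 0.99)))  # human_review
```

Making `human_review` the default branch means a new or unrecognized category is escalated rather than automated, so the agent's scope only grows when someone deliberately adds to the routine list.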

3. They measure everything

Winners track not only business outcomes but also agent performance metrics: decision accuracy, escalation rates, failure patterns, and improvement over time.

Example: Avi Medical's implementation included comprehensive monitoring of response times, automation rates, and patient satisfaction to enable continuous optimization.
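The agent performance metrics listed above can be derived from a plain decision log. The Python sketch below assumes a hypothetical log record holding the week, whether the case was escalated, and a reviewer's verdict. It computes the escalation rate, decision accuracy over reviewed cases, the most frequent failure types, and accuracy per week as a simple view of improvement over time.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    """One logged agent decision (hypothetical log schema)."""
    week: int                         # calendar week the decision was made
    escalated: bool                   # handed to a human instead of resolved
    correct: Optional[bool] = None    # reviewer verdict; None = not yet reviewed
    error_type: Optional[str] = None  # label for wrong decisions, e.g. "wrong_category"


def agent_metrics(log: list[Decision]) -> dict:
    """Summarize escalation rate, accuracy, failure patterns and weekly trend."""
    reviewed = [d for d in log if d.correct is not None]
    weekly: dict[int, list[bool]] = defaultdict(list)
    for d in reviewed:
        weekly[d.week].append(bool(d.correct))
    return {
        "escalation_rate": sum(d.escalated for d in log) / len(log) if log else 0.0,
        # Accuracy only counts decisions a human has actually checked.
        "decision_accuracy": (
            sum(bool(d.correct) for d in reviewed) / len(reviewed) if reviewed else None
        ),
        # Most frequent error labels first.
        "failure_patterns": Counter(
            d.error_type for d in reviewed if d.correct is False and d.error_type
        ).most_common(),
        # Accuracy per week, to see whether the agent improves over time.
        "accuracy_by_week": {w: sum(v) / len(v) for w, v in sorted(weekly.items())},
    }
```

Computing accuracy only over reviewed decisions avoids treating unchecked output as correct; in practice that means some fraction of the agent's decisions has to be sampled for human review on an ongoing basis.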

4. They plan for iteration

Successful teams set aside 40% of their project resources for post-launch optimization and improvement.

Example: Successful companies, such as those in Beam AI's case studies, plan for continuous improvement and optimization beyond the initial deployment.

5. They choose the right partner

Companies in the 10% don't build everything from scratch. They partner with platforms that were designed for production environments from day one.

The agentic AI readiness checklist

Before you start your next agentic AI project, assess your organization honestly:

Process maturity:

☑︎ Do you have clear, documented processes for the work you want to automate?

☑︎ Can you define success in specific, measurable terms?

☑︎ Do you have clean, accessible data for these processes?

Technical readiness:

☑︎ Do you have systems that can integrate with external agents?

☑︎ Is your data infrastructure production-ready?

☑︎ Do you have monitoring and logging capabilities?

Organizational readiness:

☑︎ Are the people who do this work today involved in the design process?

☑︎ Do you have leadership support for a 12-month timeline?

☑︎ Is there a budget for continuous improvement after launch?

Risk management:

☑︎ Have you identified what happens when the agent fails?

☑︎ Are there clear escalation paths to humans?

☑︎ Have you mapped your compliance and audit requirements?

If you can't check off most of these items, you are not ready for agentic AI yet.
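To make the self-assessment repeatable, the checklist can be turned into a small script that lists the open gaps. The Python sketch below uses shortened labels for the twelve questions; reading "most" as "more than half of all items" is an assumption, and the `min_share` parameter lets you set a stricter bar.

```python
# Shortened labels for the twelve checklist questions above, grouped by area.
CHECKLIST: dict[str, list[str]] = {
    "Process maturity": ["documented processes", "measurable success", "clean data"],
    "Technical readiness": ["integrable systems", "production data infrastructure", "monitoring and logging"],
    "Organizational readiness": ["practitioners involved", "12-month leadership support", "post-launch budget"],
    "Risk management": ["failure plan", "escalation paths", "compliance mapped"],
}


def assess(answers: dict[str, bool], min_share: float = 0.5) -> tuple[bool, list[str]]:
    """Return (ready, gaps) for yes/no answers keyed by item label.

    Unanswered items count as "no". Ready means the checked share exceeds
    min_share (0.5 reads "most" as "more than half", which is an assumption).
    """
    gaps = [
        f"{area}: {item}"
        for area, items in CHECKLIST.items()
        for item in items
        if not answers.get(item, False)
    ]
    total = sum(len(items) for items in CHECKLIST.values())
    checked_share = (total - len(gaps)) / total
    return checked_share > min_share, gaps


if __name__ == "__main__":
    answers = {item: True for items in CHECKLIST.values() for item in items}
    answers["clean data"] = False
    answers["failure plan"] = False
    ready, gaps = assess(answers)
    print(ready)  # True: 10 of 12 items checked
    print(gaps)   # ['Process maturity: clean data', 'Risk management: failure plan']
```

The list of gaps is arguably more useful than the yes/no verdict: it names the specific items to fix before the project starts.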

The production-ready path forward

Here is the step-by-step approach used by the 10% who succeed:

Phase 1: Process analysis (weeks 1-4)

Document your current process in detail

Identify the tasks with the highest volume and the greatest repeatability

Define exactly what success looks like

Phase 2: Agent design (weeks 5-8)

Design the agent workflow step by step

Define decision points and escalation triggers

Plan for edge cases and failures

Phase 3: Controlled testing (weeks 9-12)

Test with real data, but in controlled scenarios

Measure accuracy, speed, and error handling

Iterate based on actual performance

Phase 4: Limited production (weeks 13-16)

Deploy the agent on a small subset of real work

Monitor constantly and collect user feedback

Refine the agent based on real-world usage

Phase 5: Scale and optimize (week 17 onward)

Gradually increase the agent's workload

Monitor and improve continuously

Plan expansion to related processes
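Phases 4 and 5 depend on routing only part of the real workload to the agent and raising that share step by step. One common technique for this is deterministic, hash-based bucketing, sketched below in Python with the standard `hashlib` module. The phase names, percentages, and ticket IDs are illustrative assumptions, not figures from any of the implementations described here.

```python
import hashlib

# Hypothetical share of live tickets routed to the agent in each phase.
ROLLOUT_SHARE = {
    "controlled_testing": 0.0,   # Phase 3: tested offline, no live tickets
    "limited_production": 0.05,  # Phase 4: small subset of real work
    "scale": 0.5,                # Phase 5: raised step by step from here
}


def handled_by_agent(ticket_id: str, phase: str) -> bool:
    """Deterministically assign a ticket to the agent or the existing process.

    Hashing the ID instead of sampling randomly keeps each ticket's assignment
    stable, and every ticket in the 5% bucket stays with the agent when the
    share is raised later.
    """
    digest = hashlib.sha256(ticket_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return bucket < ROLLOUT_SHARE[phase]


if __name__ == "__main__":
    tickets = [f"T-{i}" for i in range(1000)]
    for phase in ROLLOUT_SHARE:
        share = sum(handled_by_agent(t, phase) for t in tickets) / len(tickets)
        print(phase, round(share, 3))
```

Because each ticket keeps its bucket, raising the share only adds new tickets to the agent's workload; the cases it was already handling do not flip back and forth between the agent and the human team.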

Why most companies skip this framework (and pay the price)

The honest truth? This approach takes longer and costs more upfront than the "build and ship" mentality most companies follow.

Recent data shows the problem is getting worse: S&P Global research found that 42% of companies abandoned most of their AI initiatives in 2024, a dramatic rise from just 17% the year before. The average organization abandoned 46% of its AI proofs of concept before they reached production.

But here is what we have learned from real implementations:

Quick-and-dirty approach: a failure rate above 80% (according to RAND research)

Production-ready approach: significantly higher success rates, with companies like Avi Medical achieving 93% cost savings and an 87% reduction in response times

The math is simple: taking the time to do it right costs less than moving fast and failing.

Real success story: Avi Medical's 93% cost reduction

The challenge:

Avi Medical, a fast-growing healthcare provider, was drowning in patient inquiries. Volume was climbing rapidly (3,000 tickets per week), straining its customer service team and hurting response times.

The mistake they avoided:

They didn't try to automate everything at once. Instead, they focused on routine patient inquiries and left complex cases to human agents.

The Beam AI solution:

Deployed multilingual AI agents that integrated with existing systems

Built structured workflows for 81% of general patient inquiries

Established clear escalation paths for complex cases

Implemented continuous learning and feedback loops

The results:

81% of patient inquiries automated (over 3,000 tickets per week)

87% reduction in average response times

93% cost savings

9% increase in patient satisfaction

Staff freed up to focus on complex, high-value cases

The key: They treated the agents as collaborative team members with clear oversight, not as a replacement for human judgment.

The bottom line: production readiness beats demo perfection

The agentic AI landscape is littered with beautiful demos that could not withstand real business environments.

The companies that succeed understand a simple truth: it is better to build one agent that works reliably in production than ten agents that work perfectly in demos.

If you are serious about agentic AI, start by asking the right questions:

What is the simplest, most valuable process we could automate first?

How will we know whether it is really working?

What happens when it inevitably runs into something unexpected?

Who needs to be involved to make this a success?

Answer these questions honestly, and you will be well on your way to joining the 10% who deploy agentic AI successfully.

Ready to build agents that actually work?

The difference between the 90% who fail and the 10% who succeed is not just strategy; it comes down to having the right foundation.

Successful agentic AI implementations need:

Process-aware design that understands your actual workflows

Production-ready architecture that handles real-world complexity

Continuous learning systems that improve over time

Built-in monitoring that knows when to escalate and bring in humans

Most platforms were built for demos, not for production environments.

See how Beam AI's enterprise-grade platform handles the complexities that break other agent-based implementations.