10.02.2026

10 min read

Architecting Autonomy: From Prompt Engineering to Goal-Oriented Agents

Every team that ships an LLM-powered tool hits the same wall. The demo works. The first real workload doesn’t. The model isn’t the problem — the architecture is.

Take a concrete example. A company builds an AI agent to replace its outsourced customer support. The demo works — paste a customer question, get an answer from the knowledge base. Then the team routes a full day’s tickets: 200 support requests ranging from order tracking to refund disputes to technical troubleshooting. The agent needs to check order histories, apply return policies, cross-reference warranty terms, and draft personalized resolutions. It tries to handle everything in a single pass, starts mixing up customers by ticket 50, applies the wrong return policy to a refund dispute, and sends a confident but incorrect resolution.

Early LLM applications worked like functions: prompt in, answer out. If the task fit in one pass and one context window (the amount of text, measured in tokens, that a model can process in a single call), it worked. But tasks that need multi-step reasoning, retries, or coordination across different types of work break the single-pass model.

The fix isn’t a better prompt. Goal-oriented agents operate in loops instead of single passes: break the objective into tasks, execute one step, check the result, adjust the plan, and repeat until done.

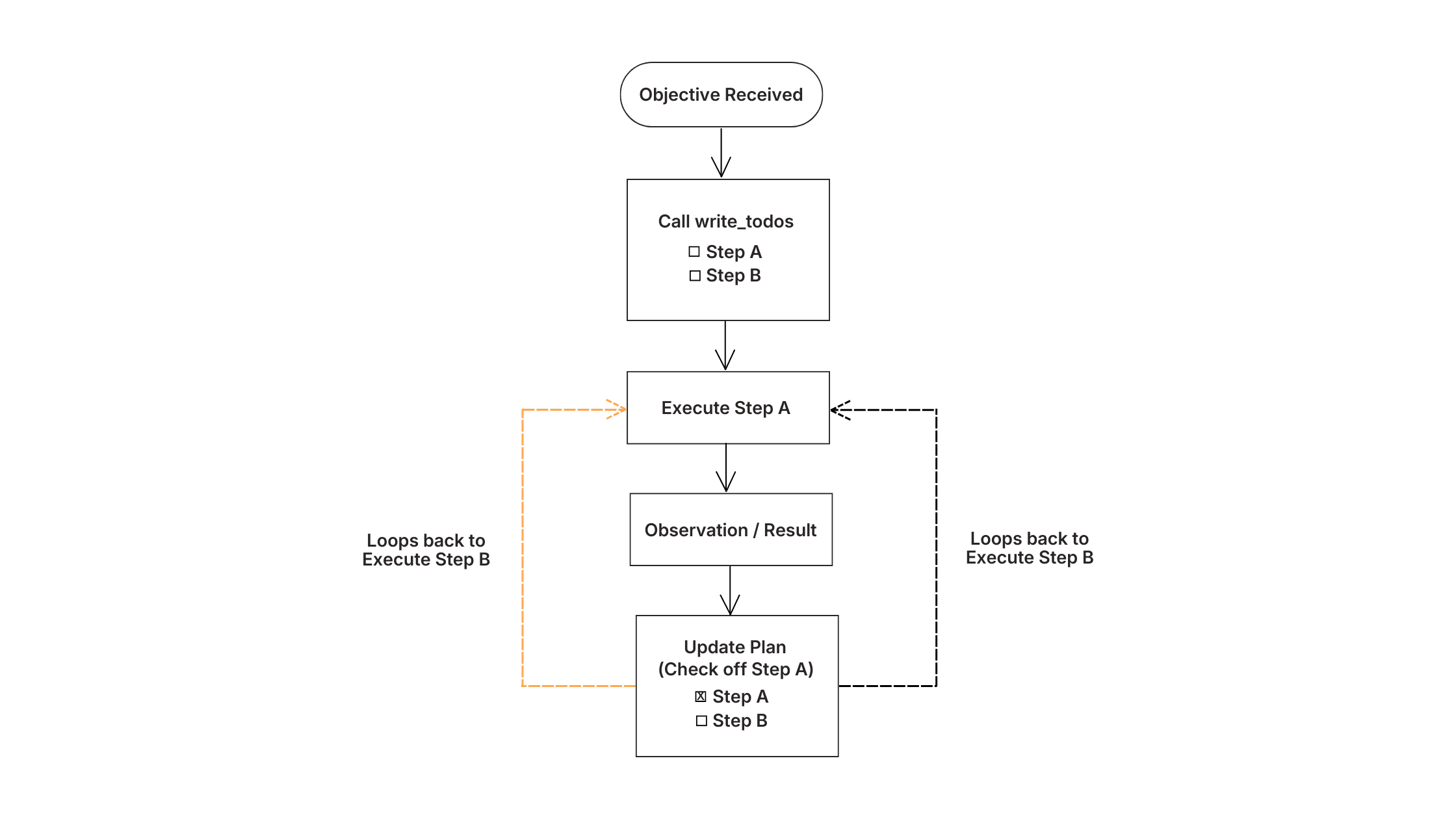

How a goal-oriented agent processes an objective: break into tasks, execute step A, check the result, update the plan, loop back until complete.

From Chains to Loops

The support agent failed because it was built as a chain — a straight line from input to output, with no ability to remember, adapt, or retry.

A chain is a stateless pipe. It processes once and moves on. It doesn’t know what it did two steps ago, can’t change course when something fails, and treats every request as if it’s seeing the world for the first time.

A goal-oriented agent is a stateful system. It tracks what it’s done, what’s left, and what changed along the way. When a step fails, it adjusts. When new information surfaces, it revises the plan.
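A minimal Python sketch makes the contrast concrete. Everything here is illustrative: `run_chain`, `AgentState`, and `run_agent` are hypothetical names, and the `execute` callback stands in for whatever model or tool call performs a step.

```python
from dataclasses import dataclass, field
from typing import Callable

# A chain: a fixed sequence of steps applied once. Nothing is remembered
# between calls, and a failing step cannot trigger a different path.
def run_chain(steps: list[Callable[[str], str]], text: str) -> str:
    for step in steps:
        text = step(text)
    return text

# A stateful agent: it records what it has done, what is left,
# and how often each task has failed.
@dataclass
class AgentState:
    pending: list[str]
    done: list[str] = field(default_factory=list)
    failures: dict[str, int] = field(default_factory=dict)

def run_agent(state: AgentState, execute: Callable[[str], bool],
              max_retries: int = 2) -> AgentState:
    while state.pending:
        task = state.pending.pop(0)
        if execute(task):
            state.done.append(task)
        else:
            count = state.failures.get(task, 0) + 1
            state.failures[task] = count
            if count <= max_retries:
                # Requeue the failed task behind the remaining work;
                # after max_retries failures it is dropped.
                state.pending.append(task)
    return state
```

The chain has no place to record a failure. The agent keeps a per-task failure count and requeues the task, giving up after `max_retries` attempts (an assumed limit).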

The difference shows up in three areas:

How decisions get made. Chains follow hard-coded steps regardless of what happens. Goal-oriented agents evaluate each step’s output and decide what to do next.

How information is stored. Chains hold everything in the prompt — once the context window fills up, information is lost. Goal-oriented agents write results to external storage and pull back only what’s needed.

How work gets distributed. Chains run everything in sequence through one model. Goal-oriented agents delegate specialized tasks to separate sub-processes that run independently.

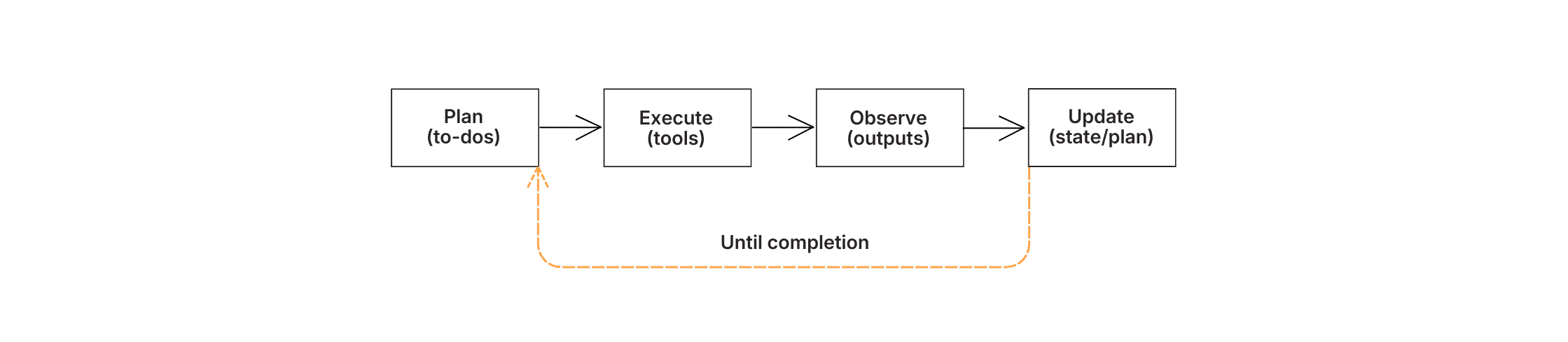

The core engineering idea is the iterative reasoning loop. Instead of assuming the model can think through the entire problem in one pass, you design for iteration:

1. Plan what needs to happen.

2. Execute one step.

3. Observe the result — did it work? Did something unexpected come back?

4. Update the plan based on what you learned.

5. Repeat until the objective is met.

Without this loop, the agent tries to resolve 200 tickets in one pass and starts mixing up customers by ticket 50. With it, each resolution is grounded in the result of the previous one.
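The five steps map onto a short control loop. This is a sketch under assumptions, not any framework’s API: `plan`, `execute`, `update`, and `is_done` are hypothetical callbacks that a real agent would back with model and tool calls, and `max_steps` is an assumed safety cap against endless looping.

```python
from typing import Callable

def agent_loop(
    objective: str,
    plan: Callable[[str], list[str]],
    execute: Callable[[str], str],
    update: Callable[[list[str], str, str], list[str]],
    is_done: Callable[[list[str]], bool],
    max_steps: int = 50,
) -> list[str]:
    """Plan, execute, observe, update; repeat until the objective is met."""
    tasks = plan(objective)                # 1. Plan what needs to happen
    observations: list[str] = []
    for _ in range(max_steps):             # hard cap so the loop always ends
        if not tasks or is_done(observations):
            break                          # 5. Objective met or nothing left
        step = tasks.pop(0)
        result = execute(step)             # 2. Execute one step
        observations.append(result)        # 3. Observe the result
        tasks = update(tasks, step, result)  # 4. Update the remaining plan
    return observations
```

If a policy check fails because warranty data is missing, `update` can insert a warranty lookup ahead of a retry, which is the kind of self-correction a single pass cannot do.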

Plan → Execute → Observe → Update — the iterative loop that lets agents self-correct instead of committing to one pass.

The Four Layers That Production Agents Converge On

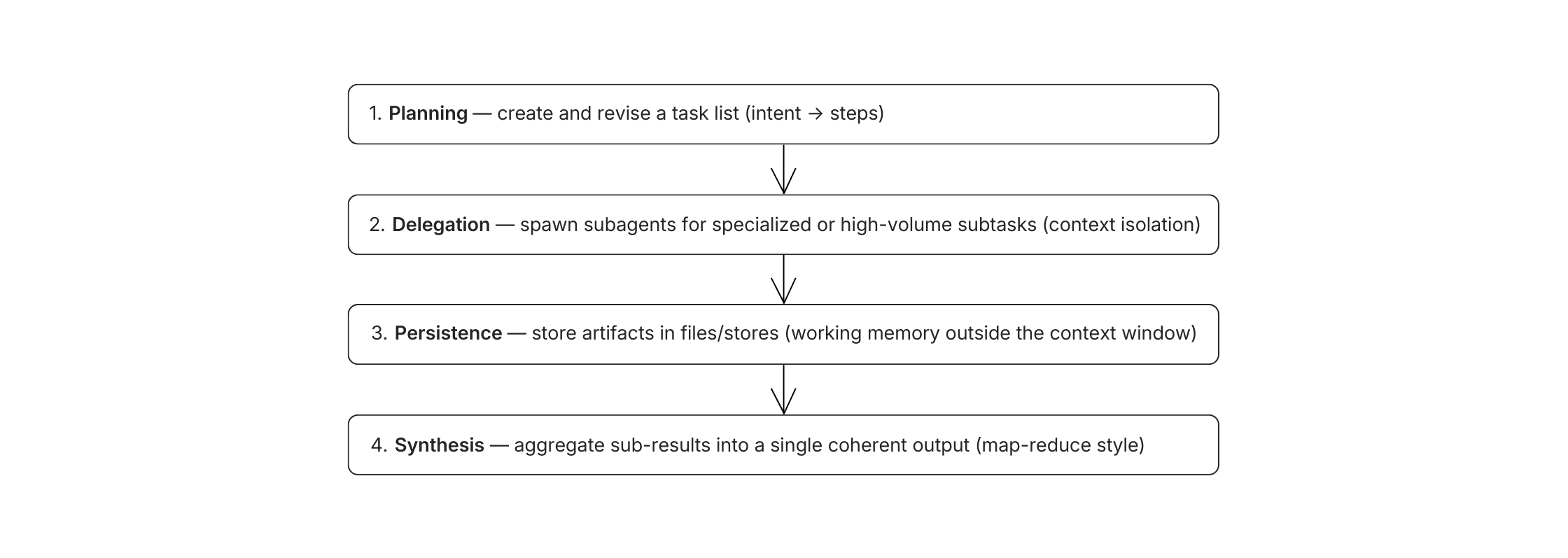

When engineering teams rebuild their single-pass agents into systems that work at scale, the resulting architectures tend to look similar. Across frameworks and industries, four layers keep appearing.

Planning creates the task list. Delegation assigns subagents. Persistence stores artifacts outside context. Synthesis merges results.

1. Planning — Separating Intent from Action

The agent doesn’t try to resolve all 200 tickets at once. It starts by creating a task list: categorize tickets by type, look up customer accounts, check order histories, apply relevant policies, draft resolutions, flag escalations.

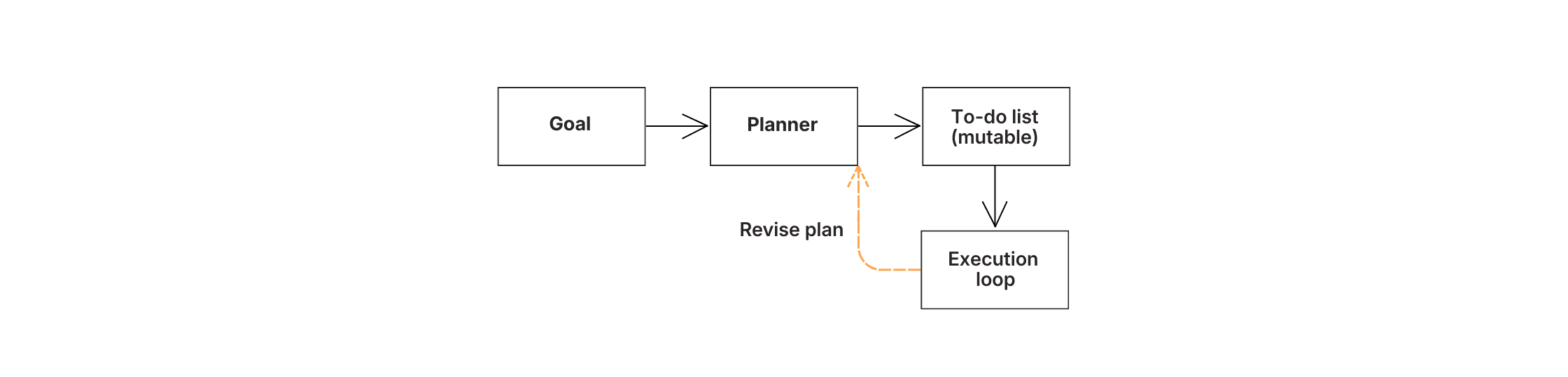

This is planning — separating what needs to happen from the act of doing it. The task list isn’t fixed. When the agent discovers that several tickets are from the same customer about the same issue, it consolidates them. When it encounters a ticket type it hasn’t seen before, it adds a new step to handle it.

Naive agents try to solve entire problems in single passes. On complex tasks, that leads to drift — the model loses track of requirements or commits early to an approach that proves wrong later. Planning prevents this by making the agent’s intentions explicit and revisable.
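A revisable plan can be as simple as an ordinary list that the agent is allowed to rewrite. In this sketch the playbook in `KNOWN_STEPS`, the `Ticket` fields, and the function names are all hypothetical. Consolidation groups tickets by customer, issue, and type, and an unknown type gets an explicit investigation step.

```python
from dataclasses import dataclass

Step = tuple[str, list[int]]  # (action, ids of the tickets it applies to)

@dataclass
class Ticket:
    ticket_id: int
    customer: str
    issue: str
    kind: str

# Hypothetical playbook: which steps handle each known ticket type.
KNOWN_STEPS = {
    "order_tracking": ["look up order history", "draft resolution"],
    "refund": ["look up order history", "apply return policy", "draft resolution"],
}

def build_plan(tickets: list[Ticket]) -> list[Step]:
    # Consolidate: same customer, same issue, same type share one set of steps.
    groups: dict[tuple[str, str, str], list[int]] = {}
    for t in tickets:
        groups.setdefault((t.customer, t.issue, t.kind), []).append(t.ticket_id)

    plan: list[Step] = []
    for (_customer, _issue, kind), ids in groups.items():
        steps = KNOWN_STEPS.get(kind)
        if steps is None:
            # Unseen ticket type: add an explicit step to work out how to handle it.
            steps = [f"investigate new ticket type '{kind}'", "draft resolution"]
        plan.extend((step, ids) for step in steps)
    return plan

def revise_remaining(plan: list[Step], completed: int,
                     new_remaining: list[Step]) -> list[Step]:
    # Keep the steps already done; replace everything after them.
    return plan[:completed] + new_remaining
```

`revise_remaining` covers the case where a step fails or reveals new information: completed steps stay, and the tail of the plan is replaced.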

The planner generates a to-do list, but it’s mutable — when step 3 fails or reveals new info, steps 4-6 get rewritten automatically.

2. Delegation — Giving Specialized Work to Specialized Agents

Asking one model to simultaneously look up account data, interpret return policies, and write customer-facing responses overloads a single context with incompatible roles. The quality of all three degrades.

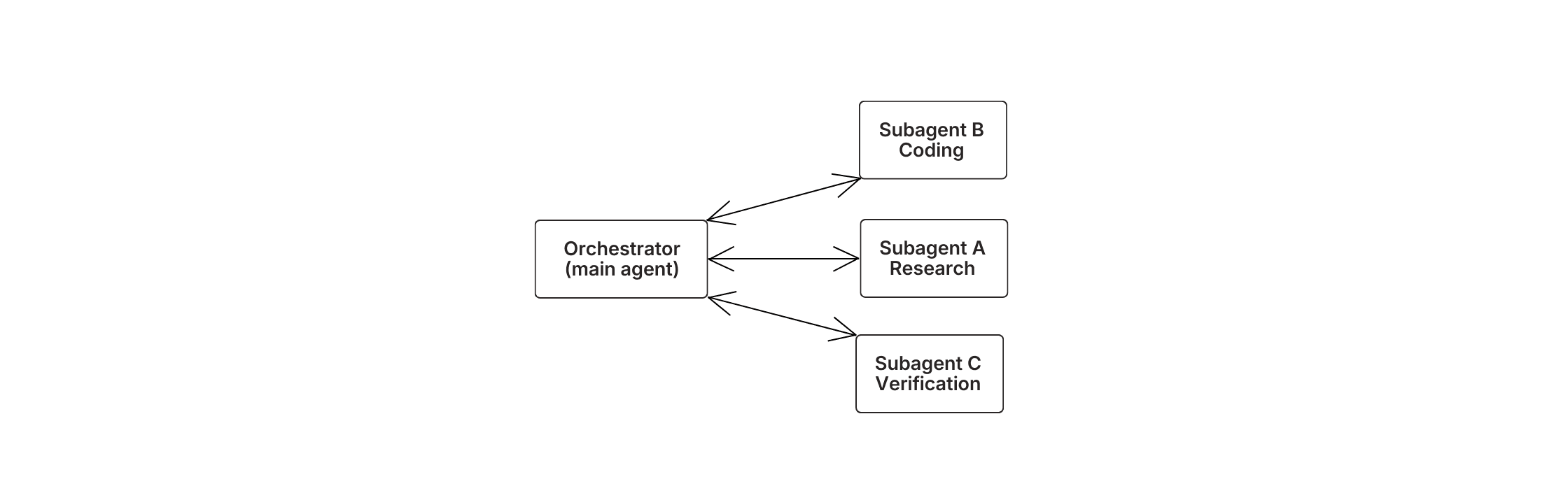

Goal-oriented agents solve this through delegation. An orchestrator — the main agent — holds the overall objective and assigns bounded tasks to specialized sub-agents. One handles account lookup and order history. Another interprets policies and determines eligibility. A third drafts customer-facing resolutions.

Each sub-agent operates in its own isolated context, focused entirely on its specific job. Only the final output flows back to the orchestrator. This keeps the main process clean and each sub-task focused.
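The orchestrator pattern can be sketched with plain objects. This is an assumption-laden illustration: `SubAgent.run` stands in for a model call that sees only that sub-agent’s own context, and the three specialist roles mirror the support example rather than any specific framework.

```python
from dataclasses import dataclass, field

@dataclass
class SubAgent:
    """A specialist with its own private context (here, just a list of notes)."""
    role: str
    context: list[str] = field(default_factory=list)

    def run(self, task: str) -> str:
        # Hypothetical stand-in for a model call seeded only with self.context.
        self.context.append(f"working on: {task}")
        return f"[{self.role}] result for {task}"

class Orchestrator:
    def __init__(self, objective: str) -> None:
        self.objective = objective  # the orchestrator alone holds the goal
        self.specialists = {
            "accounts": SubAgent("account lookup"),
            "policy": SubAgent("policy interpretation"),
            "drafting": SubAgent("resolution drafting"),
        }
        self.results: dict[str, str] = {}  # only final outputs land here

    def delegate(self, kind: str, task: str) -> str:
        output = self.specialists[kind].run(task)
        self.results[kind] = output  # working notes stay in the sub-agent
        return output
```

The orchestrator’s `results` only ever receives each specialist’s final output; the working notes in each sub-agent’s `context` never reach it.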

There’s a tradeoff worth noting: when sub-agents work in parallel without sharing context, they can make conflicting decisions. For tasks that need tight coordination, running steps sequentially with compressed context often works better than parallelizing with incomplete information.
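The coordination tradeoff shows up even in a toy example. Both agent functions below are hypothetical stand-ins for model calls, and the 30-day rule is an invented policy. Run in parallel, the drafting agent never learns the policy decision; run sequentially, it receives a compressed one-line summary of it.

```python
from concurrent.futures import ThreadPoolExecutor

def policy_agent(ticket: str, context: str) -> str:
    # Hypothetical policy specialist: it can only see `context`.
    return "refund" if "within 30 days" in context else "deny"

def draft_agent(ticket: str, context: str) -> str:
    # Hypothetical drafter: writes from whatever decision it has been told about.
    if "decision=refund" in context:
        return f"{ticket}: approved refund"
    return f"{ticket}: needs review"

def run_parallel(ticket: str, facts: str) -> list[str]:
    # Both agents start at once; the drafter never sees the policy decision.
    with ThreadPoolExecutor() as pool:
        decision = pool.submit(policy_agent, ticket, facts)
        draft = pool.submit(draft_agent, ticket, facts)
        return [decision.result(), draft.result()]

def compress(decision: str) -> str:
    # Pass forward a short summary, not the policy agent's full reasoning.
    return f"decision={decision}"

def run_sequential(ticket: str, facts: str) -> list[str]:
    decision = policy_agent(ticket, facts)
    draft = draft_agent(ticket, compress(decision))
    return [decision, draft]
```

In the parallel run the two outputs disagree (a refund decision paired with a "needs review" draft); the sequential run is slower in principle but consistent.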

The orchestrator holds the goal. Research, coding, and verification each run in separate subagent contexts, returning only clean output.

3. Persistence — Memory Beyond the Context Window

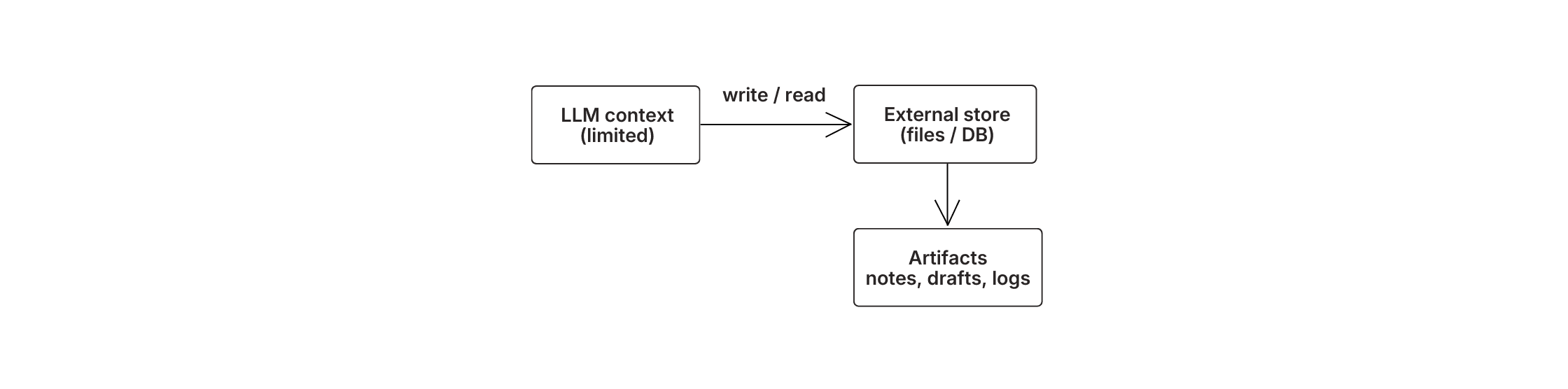

The agent needs to remember what it resolved in ticket 1 when it’s processing ticket 200. But no model can reliably hold 200 tickets’ worth of customer data, order histories, and policy lookups in its context window at once.

Persistence solves this by giving the agent external memory. As the agent processes each ticket, it writes results to a file or database — customer details, order lookups, policy decisions, resolution drafts. When it needs prior results, it reads back only the relevant pieces rather than keeping everything loaded.
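Here is a minimal sketch using Python’s built-in `sqlite3` module as the external store. The table layout and the helper names (`open_store`, `write_result`, `recall`) are assumptions; a plain file, key-value store, or vector database could play the same role.

```python
import sqlite3

def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results ("
        " ticket_id INTEGER, customer TEXT, kind TEXT, content TEXT)"
    )
    return conn

def write_result(conn: sqlite3.Connection, ticket_id: int, customer: str,
                 kind: str, content: str) -> None:
    # Persist each finished step immediately instead of keeping it in the prompt.
    with conn:  # commits the transaction on success
        conn.execute(
            "INSERT INTO results VALUES (?, ?, ?, ?)",
            (ticket_id, customer, kind, content),
        )

def recall(conn: sqlite3.Connection, customer: str, kind: str) -> list[str]:
    # Pull back only the slice the current step needs.
    rows = conn.execute(
        "SELECT content FROM results WHERE customer = ? AND kind = ? "
        "ORDER BY ticket_id",
        (customer, kind),
    )
    return [content for (content,) in rows]
```

Only the rows that `recall` returns need to go into the prompt, so what the model sees at each step depends on the query, not on how many tickets have been processed.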

This decouples the complexity of the work from the limitations of the model’s memory. The task can grow from 200 tickets to 2,000 without the context window becoming the bottleneck. In the support example, this is what lets the rebuilt agent handle ticket 200 as accurately as ticket 1: each result is stored externally rather than compressed into an increasingly crowded prompt.

Agents write notes, drafts, and intermediate results to files — then read back only what’s needed, keeping context lean while tasks grow.

4. Synthesis — Merging Results into One Deliverable

Once the agent has planned, delegated, and persisted results from all 200 tickets, it needs to produce a coherent final output — not 200 separate replies, but a resolved queue with consistent policy application, flagged escalations, and a shift summary.

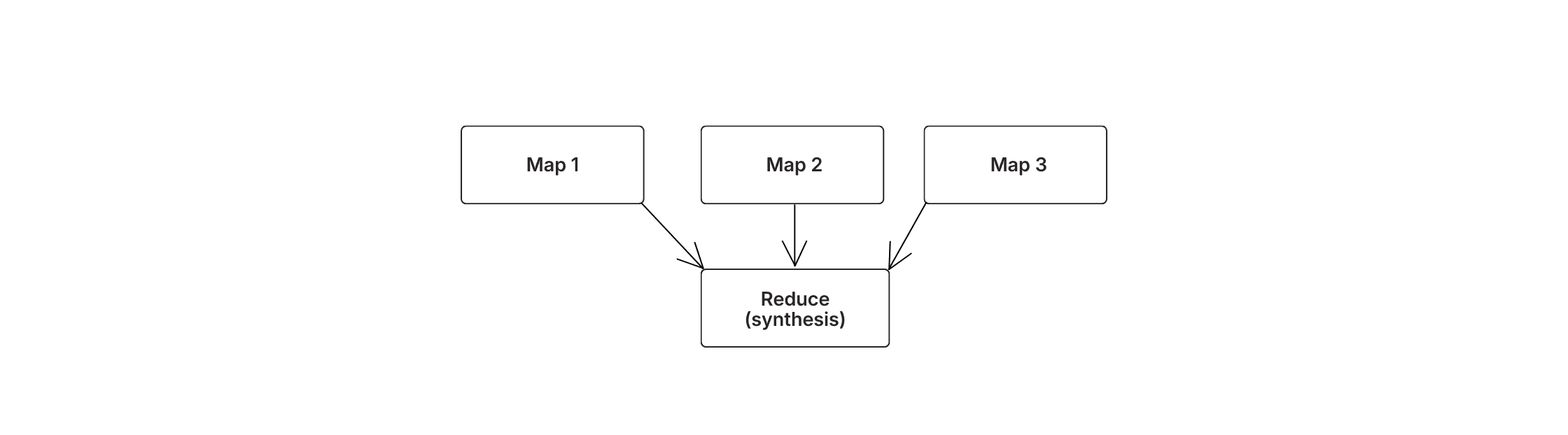

This is synthesis: merging parallel sub-results into a single deliverable. It operates in two phases:

Map. Each sub-agent completes its bounded task independently: looking up accounts, applying policies, drafting resolutions. These tasks run in parallel or in isolation, each producing its own output.

Reduce. The orchestrator reads the stored outputs from all sub-agents, resolves inconsistencies between them, identifies patterns across tickets, and assembles the final queue with a summary report.
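The two phases can be sketched with a thread pool for the map step and a plain function for the reduce step. `handle_ticket` is a hypothetical stand-in for a sub-agent, the 30-day refund rule is invented for illustration, and the reduce step applies one simple reconciliation rule: conflicting decisions for the same customer are escalated rather than merged.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def handle_ticket(ticket: dict) -> dict:
    """Map step: a hypothetical sub-agent resolves one ticket in isolation."""
    if ticket["kind"] == "refund":
        decision = "refund" if ticket["days_since_purchase"] <= 30 else "deny"
    else:
        decision = "answer"
    return {"id": ticket["id"], "customer": ticket["customer"],
            "kind": ticket["kind"], "decision": decision}

def synthesize(outputs: list[dict]) -> dict:
    """Reduce step: reconcile per-ticket outputs into one queue and a summary."""
    by_customer: dict[str, set[str]] = {}
    for o in outputs:
        by_customer.setdefault(o["customer"], set()).add(o["decision"])
    # Conflicting decisions for one customer are flagged, not silently merged.
    escalations = sorted(c for c, ds in by_customer.items() if len(ds) > 1)
    resolved = [o for o in outputs if o["customer"] not in escalations]
    return {
        "resolved": resolved,
        "escalations": escalations,
        "summary": dict(Counter(o["kind"] for o in outputs)),
    }

def run_shift(tickets: list[dict]) -> dict:
    with ThreadPoolExecutor() as pool:
        outputs = list(pool.map(handle_ticket, tickets))  # map, in parallel
    return synthesize(outputs)                            # reduce
```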

This map-reduce pattern is common in data processing, and it applies directly to agent workflows. The quality of the final output depends on all four layers working together — planning ensures nothing is missed, delegation ensures each piece is handled by the right specialist, persistence ensures nothing is forgotten, and synthesis ensures the pieces form a coherent whole.

Three subagents execute in parallel, then one orchestrator reconciles their outputs into a single coherent deliverable.

Where This Applies

The support agent is one example. The same architecture applies to any task where the objective is known but the exact steps aren’t.

Research and analysis. An agent reads dozens of sources, takes notes, identifies contradictions between them, and produces a structured report with citations. Without planning and persistence, it loses track of sources by the fifth document.

Code changes across large repositories. An agent implements a feature that touches 15 files, runs tests, discovers failures, fixes them, and iterates until the build passes. Without delegation, it tries to hold the entire codebase in one context.

Incident response. An agent investigates a production issue by querying logs, checking metrics, trying potential fixes, and documenting what worked. Without dynamic planning, it follows a fixed runbook even when the evidence points elsewhere.

Data-heavy analysis. An agent loads large datasets into external storage, computes summaries, and progressively refines the question without flooding its context. Without persistence, it hits memory limits on the first large table.
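For the data-heavy case, the dataset lives in a database and only an aggregate reaches the model. This sketch uses `sqlite3` with a hypothetical `orders` table; column names are whitelisted because SQL placeholders can bind values but not identifiers.

```python
import sqlite3

def load_table(conn: sqlite3.Connection, rows: list[tuple[str, str, float]]) -> None:
    # The full dataset lives in the database, not in the prompt.
    conn.execute("CREATE TABLE orders (region TEXT, status TEXT, amount REAL)")
    with conn:
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)

def summarize(conn: sqlite3.Connection, group_by: str) -> str:
    # Only this compact text summary goes back into the model's context.
    if group_by not in {"region", "status"}:
        raise ValueError(f"unsupported column: {group_by}")
    rows = conn.execute(
        f"SELECT {group_by}, COUNT(*), ROUND(SUM(amount), 2) FROM orders "
        f"GROUP BY {group_by} ORDER BY {group_by}"
    ).fetchall()
    return "\n".join(f"{key}: count={n}, total={total}" for key, n, total in rows)
```

Refining the question means calling `summarize` again with a different grouping (or a narrower query) rather than loading more raw rows into context.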

Each of these breaks under a single-pass architecture for the same structural reasons the support agent did.

When Not to Use This

Not everything needs a goal-oriented agent. The choice depends on how predictable the work is.

Use fixed workflows when the steps are known. If the process is always the same — extract these three fields, validate against this schema, write to this database — encode it. Fixed workflows are faster, cheaper, and more predictable. They’re also easier to audit for compliance.

Use goal-oriented agents when the steps aren’t known. When tool outputs are unpredictable, when requirements evolve mid-task, or when failures need investigation rather than retries, let the agent decide the next step dynamically.

Combine them. A goal-oriented agent can invoke a fixed workflow as one of its tools — “run the standard onboarding pipeline for this customer.” A fixed workflow can hand off to an agent at designated decision points — “if validation fails, investigate the root cause and recommend a fix.”
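Both directions of the combination can be sketched in a few lines. `onboarding_pipeline`, the `TOOLS` registry, and `investigate` are hypothetical; in a real system `investigate` would start an agent loop instead of returning a canned finding.

```python
from typing import Callable

def onboarding_pipeline(customer: dict) -> dict:
    """Fixed workflow: always the same steps, easy to audit."""
    required = ("name", "email", "plan")
    missing = [f for f in required if not customer.get(f)]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return {**customer, "status": "onboarded"}

# Direction 1: the agent can call the fixed workflow as one of its tools.
TOOLS: dict[str, Callable[[dict], dict]] = {"run_onboarding": onboarding_pipeline}

def agent_step(action: str, customer: dict) -> dict:
    # The agent picks a tool by name; one of the tools is the whole pipeline.
    return TOOLS[action](customer)

def investigate(customer: dict, error: Exception) -> dict:
    """Hypothetical hand-off point where an agent would diagnose the failure."""
    return {**customer, "status": "needs_review", "finding": str(error)}

# Direction 2: the fixed workflow hands off to an agent at a decision point.
def onboard_with_fallback(customer: dict) -> dict:
    try:
        return onboarding_pipeline(customer)
    except ValueError as err:
        return investigate(customer, err)
```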

In practice, most production systems end up using both — deterministic steps where the process is stable, agent loops where it isn’t.

What Can Still Go Wrong

Even well-architected agents introduce failure modes that don’t exist in simpler systems.

Goal drift. The agent optimizes for a proxy of the actual objective. The support agent might prioritize resolution speed over accuracy if its success criteria aren’t explicit. Mitigation: checkpoints where humans review intermediate results before the agent continues.

Unsafe actions. An agent with write access to production systems can cause damage. The support agent could auto-send resolutions to customers without human review. Mitigation: permission boundaries, sandboxed execution, and approval gates for high-impact actions.
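Permission boundaries and approval gates can sit in a thin wrapper around every action the agent takes. The action names, the `Gate` class, and the `approve` hook are assumptions for illustration; in the support example, `approve` is where a human would review a resolution before it is sent.

```python
from dataclasses import dataclass, field
from typing import Callable

HIGH_IMPACT = {"send_to_customer", "issue_refund"}  # hypothetical action names

@dataclass
class Gate:
    allowed: set[str]                     # permission boundary for this agent
    approve: Callable[[str, dict], bool]  # human or policy approval hook
    log: list[str] = field(default_factory=list)

    def perform(self, action: str, payload: dict,
                do: Callable[[dict], str]) -> str:
        if action not in self.allowed:
            self.log.append(f"blocked {action}: outside permissions")
            return "blocked"
        if action in HIGH_IMPACT and not self.approve(action, payload):
            self.log.append(f"held {action}: awaiting approval")
            return "held"
        self.log.append(f"ran {action}")
        return do(payload)
```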

Memory hazards. If the agent persists sensitive information to external storage, retention and access policies need to be designed upfront. What the agent remembers — and for how long — matters.
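A retention policy is easier to enforce when it is code rather than convention. This sketch covers only the retention half, assuming a 30-day window for items flagged as sensitive; the table schema and helper names are hypothetical, and access control would need its own design.

```python
import sqlite3
import time
from typing import Optional

RETENTION_SECONDS = 30 * 24 * 3600  # assumed 30-day window for sensitive items

def open_memory(conn: sqlite3.Connection) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS memory "
        "(key TEXT, value TEXT, sensitive INTEGER, created REAL)"
    )

def remember(conn: sqlite3.Connection, key: str, value: str,
             sensitive: bool, now: Optional[float] = None) -> None:
    created = time.time() if now is None else now
    with conn:
        conn.execute("INSERT INTO memory VALUES (?, ?, ?, ?)",
                     (key, value, int(sensitive), created))

def purge_expired(conn: sqlite3.Connection, now: Optional[float] = None) -> int:
    # Delete sensitive entries older than the window; return how many were removed.
    now = time.time() if now is None else now
    with conn:
        cur = conn.execute(
            "DELETE FROM memory WHERE sensitive = 1 AND created < ?",
            (now - RETENTION_SECONDS,),
        )
    return cur.rowcount
```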

Evaluation blind spots. Single-pass systems are easy to test: input, expected output, pass or fail. Agent loops that run for dozens of steps across multiple sub-agents are harder to evaluate. Treat observability — logging every decision, action, and outcome — as a requirement from day one, not an afterthought.
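One low-cost way to meet that requirement is to wrap every step so that it emits a structured record whether it succeeds or fails. The `traced` helper and its record fields are assumptions; the example uses only the standard `logging`, `json`, and `time` modules.

```python
import json
import logging
import time
from typing import Callable

logger = logging.getLogger("agent.trace")
logger.setLevel(logging.INFO)

def traced(step_name: str, fn: Callable[..., object], *args, **kwargs) -> object:
    """Run one agent step and log a JSON record of the decision and its outcome."""
    start = time.perf_counter()
    record = {"step": step_name, "args": repr(args)}
    try:
        result = fn(*args, **kwargs)
        record.update(outcome="ok", result=repr(result))
        return result
    except Exception as err:
        record.update(outcome="error", error=repr(err))
        raise  # observability must not swallow failures
    finally:
        record["ms"] = round((time.perf_counter() - start) * 1000, 1)
        logger.info(json.dumps(record))
```

Call `logging.basicConfig()` or attach your own handler to see the records; each one is a single JSON line that can be searched or replayed when a long run goes wrong.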

The Architecture Is the Product

The support agent that failed on 200 tickets didn’t need a better model. It needed planning to break work into steps, delegation to handle different types of resolution, persistence to maintain memory across tickets, and synthesis to produce coherent output.

Goal-oriented agents apply standard engineering ideas — state management, control flow, modularity, observability — to LLMs. Once you adopt planning loops, tool-backed memory, and delegation, the system stops being a prompt and starts behaving like a program that can pursue objectives.