18 Sept 2025


The Beam AI Stack: A Comprehensive Architecture for Enterprise AI Agents

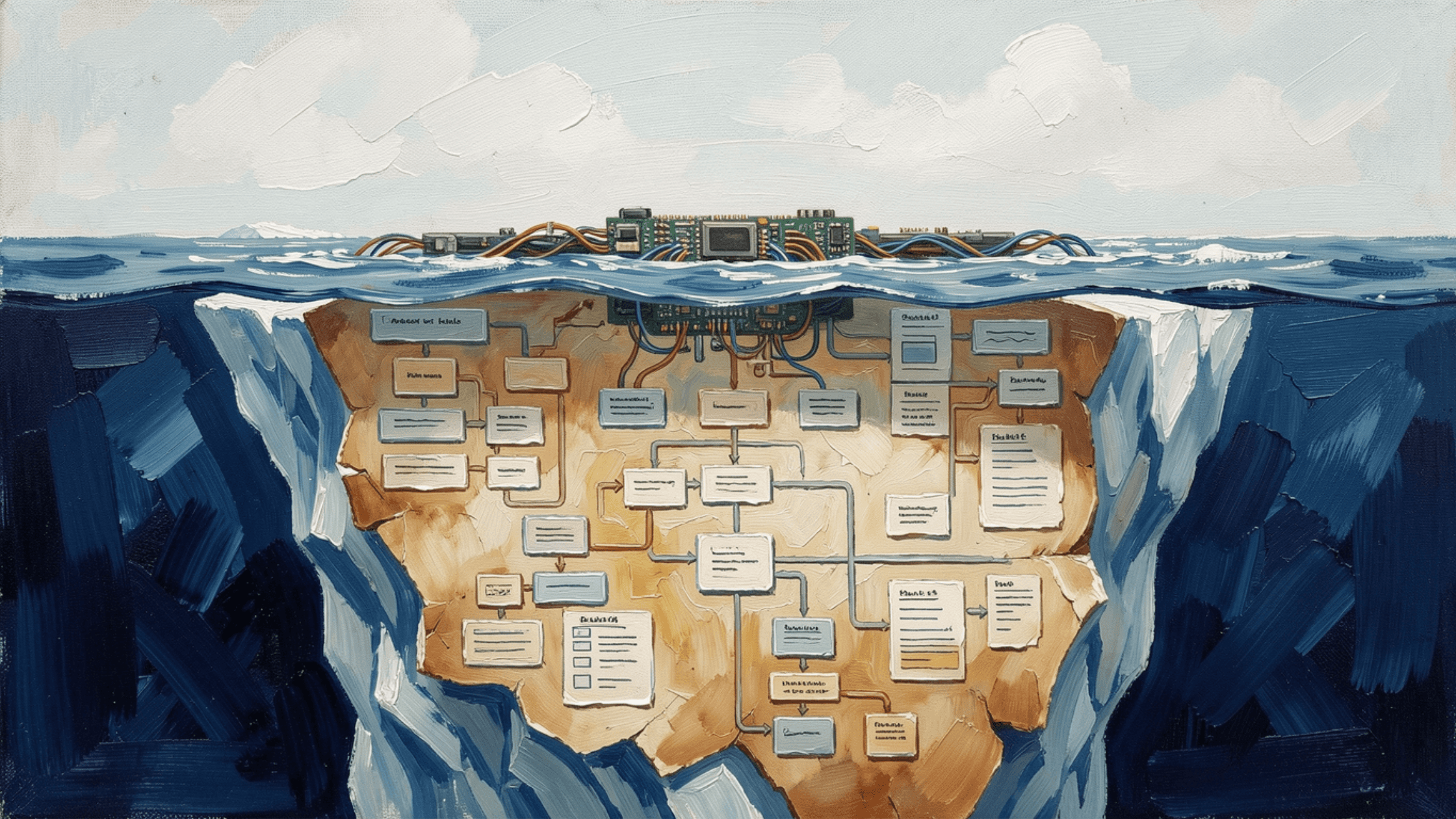

Most companies trying to build AI agents run into the same problem: roughly 60% of their time goes into messy integrations and only 40% into actual intelligence. You juggle LangChain for workflows, LiteLLM for model management, Composio for integrations, separate monitoring tools, and custom feedback systems, and somehow try to make them all work together in production. Meanwhile, your "AI transformation" timeline stretches from months to quarters, because every new tool brings its own authentication, data formats, and failure modes.

What if there were a better way?

Instead of assembling a Frankenstein's monster of disconnected tools, Beam AI provides the first complete stack purpose-built for production AI agents. Every layer, from the LLM infrastructure to the user experience, is designed to work seamlessly with the others.

The result? Companies deploy reliable AI agents in weeks instead of quarters, and focus on solving business problems instead of managing tool compatibility.

Here is how we built the stack that handles more than 5,000 tasks per minute, and why the full-stack approach is the only way to build AI agents that actually work in production.

Ready to skip the integration nightmare? See how our full-stack AI agent platform eliminates 60% of development overhead and gets you to production in weeks, not months.


The Complete Stack: Six Integrated Layers

Beam AI's architecture is designed as a vertically integrated system. Each layer contributes to performance, reliability, and ease of deployment.

LLM Infrastructure Layer

At the base of the Beam AI stack sits a flexible LLM infrastructure layer that integrates with the leading AI providers, including Anthropic, OpenAI, and Gemini. This multi-provider approach keeps organizations from being locked into a single vendor and makes it possible to pick the best model for each task.

By using different models for different purposes, such as smaller models for classification and larger models for complex reasoning, Beam AI balances performance and cost efficiency across deployments.
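To make the routing idea concrete, here is a minimal Python sketch of task-based model selection. It is not Beam AI's implementation: the tier names `CHEAP_MODEL` and `STRONG_MODEL`, the `SIMPLE_TASKS` set, and the prompt-length cutoff are all illustrative assumptions.

```python
from dataclasses import dataclass

# Placeholder tier names (assumptions); swap in real model IDs from your providers.
CHEAP_MODEL = "small-fast-model"
STRONG_MODEL = "large-reasoning-model"

# Task types this sketch assumes a small model can handle well.
SIMPLE_TASKS = {"classification", "extraction"}


@dataclass
class Task:
    kind: str    # e.g. "classification" or "reasoning"
    prompt: str


def select_model(task: Task, max_simple_chars: int = 2000) -> str:
    """Route simple, short tasks to the cheap tier and everything else to the strong tier."""
    if task.kind in SIMPLE_TASKS and len(task.prompt) <= max_simple_chars:
        return CHEAP_MODEL
    return STRONG_MODEL


if __name__ == "__main__":
    print(select_model(Task("classification", "Is this email a complaint?")))  # small-fast-model
    print(select_model(Task("reasoning", "Draft a settlement proposal.")))     # large-reasoning-model
```

A production router would likely weigh more signals (latency budgets, per-token prices, measured accuracy per model), but the shape stays the same: characterize the request, then map it to a tier.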

Beam AI Core: Unified LLM APIs

Beam AI Core provides a unified API layer that abstracts away the complexity of working with multiple LLM providers. This standardization layer handles authentication, rate limiting, error handling, and response formatting across different models, so developers can switch between providers seamlessly.

The unified approach also enables dynamic model switching based on task requirements, so each operation uses the most suitable and cost-effective model without any code changes.
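The sketch below shows, under stated assumptions, what a provider-agnostic layer of this kind might look like: a single `complete()` call that normalizes responses into one `Completion` type, spaces out calls with a crude client-side rate limit, and retries transient failures with exponential backoff. The adapters are offline stubs, and `UnifiedClient`, `TransientError`, and `make_flaky_adapter` are hypothetical names, not part of any Beam AI SDK.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Completion:
    """One normalized response shape, whatever provider produced it."""
    provider: str
    text: str


class TransientError(Exception):
    """Raised by an adapter for retryable failures such as rate limits or timeouts."""


# An adapter wraps one provider's SDK and returns a Completion (stubbed below).
Adapter = Callable[[str], Completion]


class UnifiedClient:
    def __init__(self, adapters: Dict[str, Adapter], max_attempts: int = 3,
                 min_interval_s: float = 0.0, backoff_s: float = 0.5):
        self.adapters = adapters
        self.max_attempts = max_attempts
        self.min_interval_s = min_interval_s  # crude client-side rate limit
        self.backoff_s = backoff_s
        self._last_call = float("-inf")

    def _throttle(self) -> None:
        # Sleep just long enough to keep calls at least min_interval_s apart.
        wait = self.min_interval_s - (time.monotonic() - self._last_call)
        if wait > 0:
            time.sleep(wait)
        self._last_call = time.monotonic()

    def complete(self, provider: str, prompt: str) -> Completion:
        """Callers switch providers by changing the `provider` key, nothing else."""
        adapter = self.adapters[provider]
        for attempt in range(self.max_attempts):
            self._throttle()
            try:
                return adapter(prompt)
            except TransientError:
                if attempt == self.max_attempts - 1:
                    raise  # out of attempts: surface the last error
                time.sleep(self.backoff_s * 2 ** attempt)  # exponential backoff
        raise ValueError("max_attempts must be at least 1")


def make_flaky_adapter(name: str, failures: int) -> Adapter:
    """Stub provider that fails `failures` times before answering."""
    state = {"calls": 0}

    def adapter(prompt: str) -> Completion:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise TransientError(f"{name}: rate limited")
        return Completion(provider=name, text=f"[{name}] {prompt}")

    return adapter


if __name__ == "__main__":
    client = UnifiedClient({
        "provider_a": make_flaky_adapter("provider_a", failures=1),
        "provider_b": make_flaky_adapter("provider_b", failures=0),
    }, backoff_s=0.01)
    print(client.complete("provider_a", "Summarize this ticket").text)  # [provider_a] Summarize this ticket
    print(client.complete("provider_b", "Summarize this ticket").text)  # [provider_b] Summarize this ticket
```

Because every adapter returns the same `Completion` shape, moving a workload to another provider means changing the `provider` key, not the code that consumes the response.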

Beam Agent Framework

The Agent Framework, the heart of the platform, comprises six critical components that turn raw AI capabilities into reliable business automation.

Agents act as intelligent executors that perceive, reason, and act autonomously. Flows provide structured workflows, derived from standard operating procedures (SOPs), that ensure deterministic execution. Tools let agents interact with external systems through more than 1,500 integrations.
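One way to picture how flows and tools fit together is to encode an SOP as a fixed list of steps, each of which may call a tool. This is a minimal sketch assuming a customer-support SOP. `lookup_customer`, `classify_request`, `draft_reply`, and `run_flow` are hypothetical names, and the in-memory dictionary stands in for a real CRM integration.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Step = Callable[[State], State]


# Hypothetical tools: stand-ins for calls to a CRM or other external system.
def lookup_customer(state: State) -> State:
    crm = {"C-1": "Ana"}  # toy in-memory "CRM"
    return {**state, "customer_name": crm.get(state["customer_id"], "customer")}


def classify_request(state: State) -> State:
    # A real agent might ask an LLM here; a keyword rule keeps the sketch offline.
    category = "refund" if "refund" in state["message"].lower() else "general"
    return {**state, "category": category}


def draft_reply(state: State) -> State:
    reply = f"Hello {state['customer_name']}, we have received your {state['category']} request."
    return {**state, "reply": reply}


# The SOP encoded as a fixed, ordered list of steps: the order never varies between runs.
SUPPORT_SOP_FLOW: List[Step] = [lookup_customer, classify_request, draft_reply]


def run_flow(flow: List[Step], state: State) -> State:
    for step in flow:
        state = step(state)
    return state


if __name__ == "__main__":
    result = run_flow(SUPPORT_SOP_FLOW, {"customer_id": "C-1", "message": "I need a refund"})
    print(result["reply"])  # Hello Ana, we have received your refund request.
```

The determinism comes from the flow itself. Because the sequence of steps is fixed, only what happens inside an individual step (for example, an LLM call) can vary between runs.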

Prompt Optimization continuously refines agent instructions to improve accuracy. Human Feedback creates learning loops that improve agent performance over time. Test & Eval provides comprehensive evaluation frameworks to ensure that agents meet production standards before deployment.
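A pre-deployment gate is one common way to apply Test & Eval. This hedged sketch scores an agent against a small labeled "golden set" and blocks release below a threshold. The 0.9 default loosely mirrors the 90% accuracy figure quoted in this article and is an assumption, as are `evaluate`, `ready_for_production`, and the toy `keyword_agent`.

```python
from typing import Callable, List, Tuple

Case = Tuple[str, str]  # (input text, expected label)


def evaluate(agent: Callable[[str], str], cases: List[Case]) -> float:
    """Fraction of labeled cases the agent gets exactly right."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = sum(agent(text) == expected for text, expected in cases)
    return correct / len(cases)


def ready_for_production(agent: Callable[[str], str], cases: List[Case],
                         threshold: float = 0.9) -> bool:
    """Deployment gate: block release unless accuracy meets the threshold."""
    return evaluate(agent, cases) >= threshold


def keyword_agent(text: str) -> str:
    # Toy agent under test; it misses refund requests that never say "refund".
    return "refund" if "refund" in text.lower() else "general"


GOLDEN_SET: List[Case] = [
    ("Please refund my order", "refund"),
    ("Where is my package?", "general"),
    ("Refund requested for invoice 42", "refund"),
    ("I want my money back", "refund"),
]

if __name__ == "__main__":
    print(evaluate(keyword_agent, GOLDEN_SET))              # 0.75
    print(ready_for_production(keyword_agent, GOLDEN_SET))  # False
```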

Beam Cloud

The Beam Cloud layer offers flexible deployment options to meet diverse enterprise needs. Organizations can choose self-hosted deployments for maximum control and data sovereignty, managed-service options for simplified operations with enterprise support, or the full platform experience (SaaS) for rapid deployment and scaling.

The Managed Integrations/Tools component provides preconfigured connectors to popular enterprise systems such as Salesforce, Gmail, Slack, and Jira, removing the need to build custom integrations.

Developer Experience Layer

At the top of the stack, Beam SDK and Beam Studio provide powerful interfaces for building and managing AI agents. The SDK lets developers create, deploy, and manage agents programmatically, using their preferred programming languages and development workflows.

Beam Studio offers a visual, no-code environment in which business users can design agent workflows, monitor performance, and manage deployments without technical expertise. Together, these tools democratize AI agent creation while retaining the sophistication needed for complex enterprise use cases.

This layered architecture lets Beam AI deliver on its promise of reliable, scalable AI agents that handle more than 5,000 tasks per minute with over 90% accuracy, while meeting the flexibility and governance requirements essential for enterprise deployment.

Key Benefits

Why does all this matter for your team? Here are the main advantages:

Flexibility: Multiple deployment and LLM provider options prevent vendor lock-in

Reliability: Structured workflows and thorough testing ensure production-ready agents

Scalability: Proven to handle thousands of tasks per minute with consistent performance

Accessibility: A developer-friendly SDK and no-code tools serve different kinds of users

Integration: Prebuilt connectors to more than 1,500 enterprise systems speed up implementation

The Full-Stack Advantage

Most companies building AI agents today are like someone trying to build a car by buying the engine from one company, the transmission from another, and the wheels from a third, then hoping everything fits together. Beam AI is like getting a complete Tesla: everything is designed to work together from day one.

Why Each Layer Makes Beam AI Unique

Let's break down how each layer sets Beam apart.

| Layer | What others do | What Beam does |
|---|---|---|
| LLM infrastructure + unified APIs | Use LiteLLM to connect to different LLMs, but still handle rate limiting, cost optimization, and model selection yourself. | Connects to multiple LLMs and intelligently routes each request to the right model based on task complexity. Simple classification uses a small, inexpensive model; complex reasoning switches automatically and transparently to GPT-4 or Claude, with no manual management. |
| Agent framework (the heart) | Combine LangChain/LangGraph for workflows, DSPy for prompt optimization, and separate testing tools, then build custom feedback loops. | Provides six integrated components (agents, flows, tools, prompt optimization, human feedback, and test & eval) that work together. Human feedback automatically updates flows, optimizes prompts, and triggers tests with no extra integration. |
| Beam Cloud (deployment layer) | Set up hosting, manage Docker containers, configure monitoring, handle scaling, and build API integrations separately. | Offers self-hosted, managed, or SaaS deployment with more than 1,500 prebuilt integrations that work out of the box, eliminating "integration hell". |
| Developer experience (top layer) | Developers work in code while business users need separate tools, and the two rarely connect. | One platform with two interfaces: developers use the SDK, business users use Studio. Both work with the same agents and workflows. A business user can design a workflow in Studio, and a developer can extend it with custom code through the SDK. |

The Advantage: Save Time and Headaches

Here is how an integrated approach saves time and headaches.

Built-in intelligence: When your agent processes insurance claims, it does more than execute steps. Test & Eval continuously monitors accuracy, Human Feedback improves decisions, and Prompt Optimization refines the wording, all within the same system. With modular tools, you would have to wire these feedback loops together manually.

No integration tax: With modular tools, 30-40% of development time goes into getting the tools to talk to each other. Beam eliminates this work. Everything speaks the same language, uses the same data formats, and shares the same authentication.

A single source of truth: One dashboard shows agent performance, costs, errors, and improvements. With modular tools, you would have to check several dashboards and try to correlate them yourself.

Production-ready from day one: Security, compliance, monitoring, and scaling are built in. With modular approaches, you discover these needs painfully over time ("Oh, we need audit logs for compliance... that's another tool to integrate").
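The insurance-claims loop described under "Built-in intelligence" can be sketched as follows. This is a toy under explicit assumptions, not how Beam AI implements it. A "prompt" is reduced to a tuple of keywords, `FeedbackLoop` and `toy_claims_agent` are hypothetical, and prompt optimization is a naive pick-the-best-candidate search rather than any particular optimizer.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# In this toy, a "prompt" is just the keywords that make a claim auto-approvable;
# a real system would send prompt text to an LLM instead.
Prompt = Tuple[str, ...]


def toy_claims_agent(prompt: Prompt, claim: str) -> str:
    return "approve" if any(k in claim.lower() for k in prompt) else "review"


@dataclass
class FeedbackLoop:
    prompt: Prompt
    test_cases: List[Tuple[str, str]] = field(default_factory=list)

    def accuracy(self, prompt: Prompt) -> float:
        """Test & Eval: score a prompt against every case collected so far."""
        if not self.test_cases:
            return 1.0  # nothing to fail yet
        hits = sum(toy_claims_agent(prompt, c) == label for c, label in self.test_cases)
        return hits / len(self.test_cases)

    def record_correction(self, claim: str, correct_label: str) -> None:
        """Human Feedback: each reviewer correction becomes a permanent regression test."""
        self.test_cases.append((claim, correct_label))

    def optimize(self, candidates: List[Prompt]) -> Prompt:
        """Prompt Optimization (naive): keep whichever prompt scores best on all cases."""
        self.prompt = max([self.prompt, *candidates], key=self.accuracy)
        return self.prompt


if __name__ == "__main__":
    loop = FeedbackLoop(prompt=("windshield",))
    loop.record_correction("Windshield crack on the highway", "approve")
    loop.record_correction("Minor hail damage to the roof", "approve")
    loop.record_correction("Total loss after flood", "review")
    print(round(loop.accuracy(loop.prompt), 2))                 # 0.67
    print(loop.optimize([("windshield", "hail"), ("flood",)]))  # ('windshield', 'hail')
```

What matters in the sketch is the wiring. The human correction, the evaluation, and the prompt update all read and write the same test set. With separate tools, you would have to build that connection by hand.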

Real-world example: Building a customer service agent

Modular approach:

Weeks 1-2: Install LiteLLM for LLM management

Weeks 3-4: Integrate LangGraph for workflow orchestration

Weeks 5-6: Add Composio for CRM integration

Weeks 7-8: Build a custom feedback system

Weeks 9-10: Set up monitoring with LangSmith

Weeks 11-12: Deploy and discover all the edge cases where the tools don't get along

Beam AI approach:

Week 1: Design the workflow in Studio and test it with real data

Week 2: Deploy with CRM integration

Week 3: Refine based on automatic feedback loops

Done.

The other 9 weeks? You're already improving v2 while your competitors are still debugging v1.

The Bottom Line

This complete AI agent platform lets Beam AI deliver on its promise of reliable, scalable AI agents while meeting the flexibility and governance requirements essential for enterprise deployment. By addressing every layer, from infrastructure to user experience, Beam AI gives organizations a complete platform for transforming their operations through intelligent automation.

The choice is clear: keep struggling with disconnected tools and integration nightmares, or adopt a unified approach that gets you to production faster and keeps you there reliably.

Beam AI is the most complete intelligent automation solution on the market.